In this article, we’ll explore the different Deep Learning algorithms that can be used and discover their nuances.

In Deep Learning, there are various algorithms for different objectives.

Nevertheless, these algorithms are not easy to tell apart. Whereas the algorithms of traditional Machine Learning are easily identifiable, this is not the case in Deep Learning. One important property helps with this task.

Once we’ve explored this property, we’ll understand how to differentiate between Deep Learning algorithms. Then we’ll discover the five main algorithms in the field.

Differentiate Deep Learning algorithms

An important property

In traditional Machine Learning, when the ML Engineer is faced with a problem, he has to select an appropriate algorithm (decision tree, SVM, KNN, etc.). These algorithms have been developed by experts and are available in programming libraries.

Once an algorithm has been chosen, the ML Engineer experiments to determine the optimal hyperparameters for solving the problem.

The ML Engineer thus selects an algorithm which he must then optimize.

However, Deep Learning works differently. Libraries do not offer a catalog of ready-to-use algorithms in the same way.

The approach is to build algorithms manually. To do this, the ML Engineer has layers at his disposal, which he can combine.

A Deep Learning algorithm, also known as a neural network, is an assembly of layers.

There are many types of layers, each with a different effect on the data. A neural network can have dozens or even hundreds of layers. As a result, the construction possibilities are virtually infinite.

These infinite possibilities mean that Deep Learning algorithms have no fixed structure. Consequently, they are not as clearly identifiable as traditional Machine Learning algorithms.

In Deep Learning, the ML Engineer doesn’t have to select an algorithm from a predefined set, but manually builds an algorithm by selecting layers and combining them.
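To make the idea of assembling layers concrete, here is a minimal sketch in plain Python. The toy layers `double` and `add_one` and the helper `build_network` are hypothetical names chosen for illustration; real libraries express the same idea through containers such as `torch.nn.Sequential` in PyTorch or `keras.Sequential` in Keras. The point is only that the same layers, combined differently, give a different network.

```python
from typing import Callable, List

Vector = List[float]
Layer = Callable[[Vector], Vector]

def build_network(layers: List[Layer]) -> Layer:
    """Assemble layers into a network: the output of each layer feeds the next."""
    def network(x: Vector) -> Vector:
        for layer in layers:
            x = layer(x)
        return x
    return network

# Hypothetical toy layers, for illustration only
def double(x: Vector) -> Vector:
    return [2.0 * v for v in x]

def add_one(x: Vector) -> Vector:
    return [v + 1.0 for v in x]

# Same building blocks, two different assemblies
net_a = build_network([double, add_one])
net_b = build_network([add_one, double])

print(net_a([1.0, 2.0]))  # [3.0, 5.0]
print(net_b([1.0, 2.0]))  # [4.0, 6.0]
```

Merely swapping the order of two layers changes the output, which hints at why the space of possible architectures is so large.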

Differentiating Deep Learning algorithms through layers

Although there are no predefined Deep Learning algorithms, they can be differentiated by their constituent elements: layers.

The layer is the main building block of a neural network.

A neural network is a stack of layers. Layers contain neurons that transform input data into output data. As neurons are not the focus of this article, we won’t dive into this subject here.

Deep Learning libraries provide predefined layers that the ML Engineer can select. Each layer has a different effect on the data.

For example, convolution layers are specialized for processing images. So, to tackle a Computer Vision project, we would use a neural network composed mainly of convolution layers.

To categorize neural networks, we can then observe the main layers of which they are composed. Thus, layers are predefined elements that enable us to differentiate Deep Learning algorithms.

Artificial Neural Networks – ANN

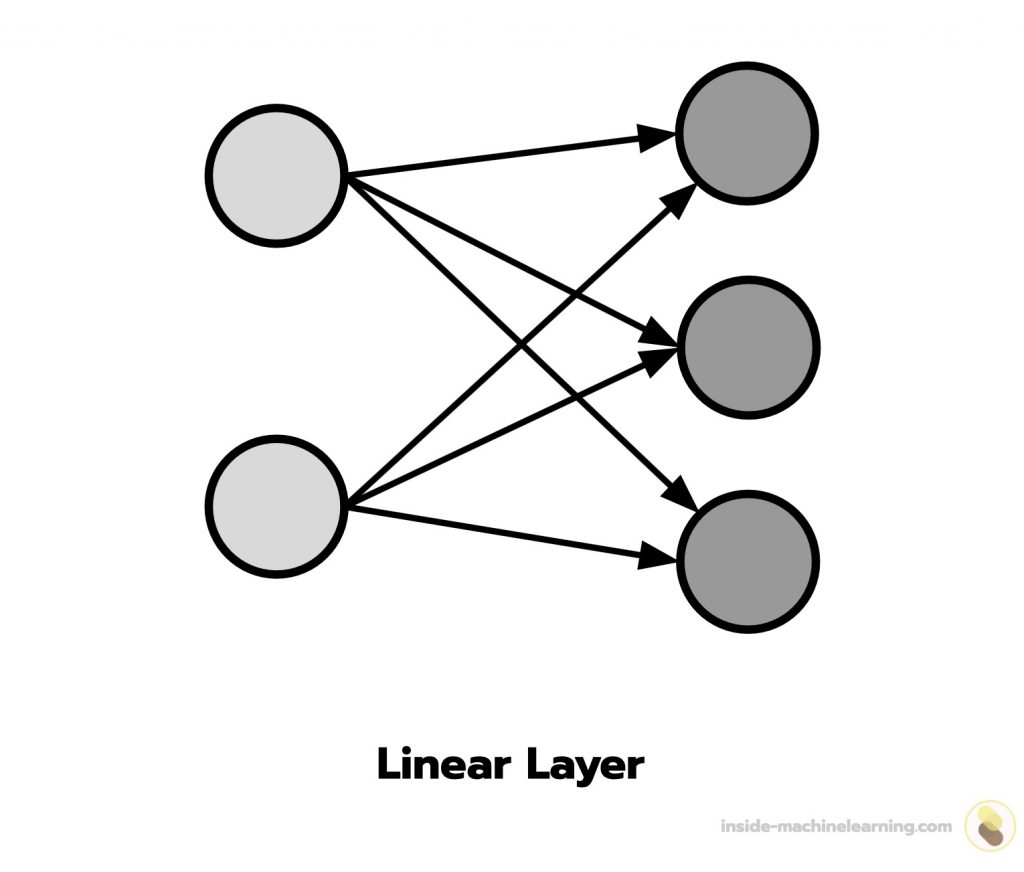

An artificial neural network is made up of linear layers.

In a linear layer, each neuron connects to all the neurons in the previous layer. This allows information to propagate throughout the entire network.
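A linear layer (also called a fully connected or dense layer) can be sketched in a few lines of plain Python, assuming the usual formulation in which each output neuron computes a weighted sum of every input plus a bias. The weights below are arbitrary illustrative values, not learned ones.

```python
from typing import List

def linear(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Fully connected layer: every output neuron is connected to every input neuron.

    weights[j][i] is the connection strength between input neuron i and
    output neuron j; bias[j] is added to output neuron j.
    """
    if any(len(row) != len(x) for row in weights):
        raise ValueError("each row of weights must have one entry per input")
    return [
        sum(w_ji * x_i for w_ji, x_i in zip(row, x)) + b_j
        for row, b_j in zip(weights, bias)
    ]

x = [1.0, 2.0, 3.0]          # 3 input neurons
W = [[0.5, 0.25, 1.0],       # output neuron 0 sees all 3 inputs
     [-1.0, 0.0, 1.0]]       # output neuron 1 sees all 3 inputs
b = [0.0, 0.5]
print(linear(x, W, b))       # [4.0, 2.5]
```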

The functioning of an artificial neural network is inspired by the human brain.

In the human brain, when information is perceived, it is sent to neurons. The neurons then successively exchange this information and process it. Thanks to this processing, the brain can interpret the information and initiate a reaction.

For example, when we listen to birdsong, our ears transmit this information to the brain’s neurons. The neurons then communicate the information to each other for processing. At the end of this process, the brain understands the information and triggers a reaction: for most people, a feeling of tranquility.

In an artificial neural network, this successive processing of information often allows Deep Learning algorithms to outperform traditional algorithms, especially on large and complex datasets. Artificial neural networks are mainly used for classification and regression tasks.

Artificial neural networks are based on the way the brain works. They feature linear layers that allow information to propagate throughout the entire network.

Note: an activation function is added to linear layers to enable them to perform nonlinear transformations. This property underpins the performance of Deep Learning algorithms.
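The following plain-Python sketch illustrates why the activation function matters. It assumes ReLU (`max(0, x)`), one common activation function among several, and uses hypothetical one-weight "layers". Without an activation, two stacked linear layers behave like a single linear layer, so the output for a sum of inputs equals the sum of the outputs; inserting ReLU between them breaks that property.

```python
from typing import List

def relu(values: List[float]) -> List[float]:
    """ReLU activation: keeps positive values and replaces negative ones with 0."""
    return [max(0.0, v) for v in values]

def scale(values: List[float], w: float) -> List[float]:
    """Toy linear layer (one weight, no bias), for illustration only."""
    return [w * v for v in values]

def two_linear(x: List[float]) -> List[float]:
    # Two stacked linear layers: equivalent to a single scale by -2
    return scale(scale(x, -1.0), 2.0)

def two_linear_relu(x: List[float]) -> List[float]:
    # Same two layers with a ReLU activation in between
    return scale(relu(scale(x, -1.0)), 2.0)

a, b = [1.0], [-3.0]
a_plus_b = [a[0] + b[0]]  # [-2.0]

# Linear stack: f(a + b) == f(a) + f(b)
print(two_linear(a_plus_b)[0], two_linear(a)[0] + two_linear(b)[0])  # 4.0 4.0
# With ReLU: f(a + b) != f(a) + f(b), so the network is no longer linear
print(two_linear_relu(a_plus_b)[0], two_linear_relu(a)[0] + two_linear_relu(b)[0])  # 4.0 6.0
```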

Convolutional Neural Networks – CNN

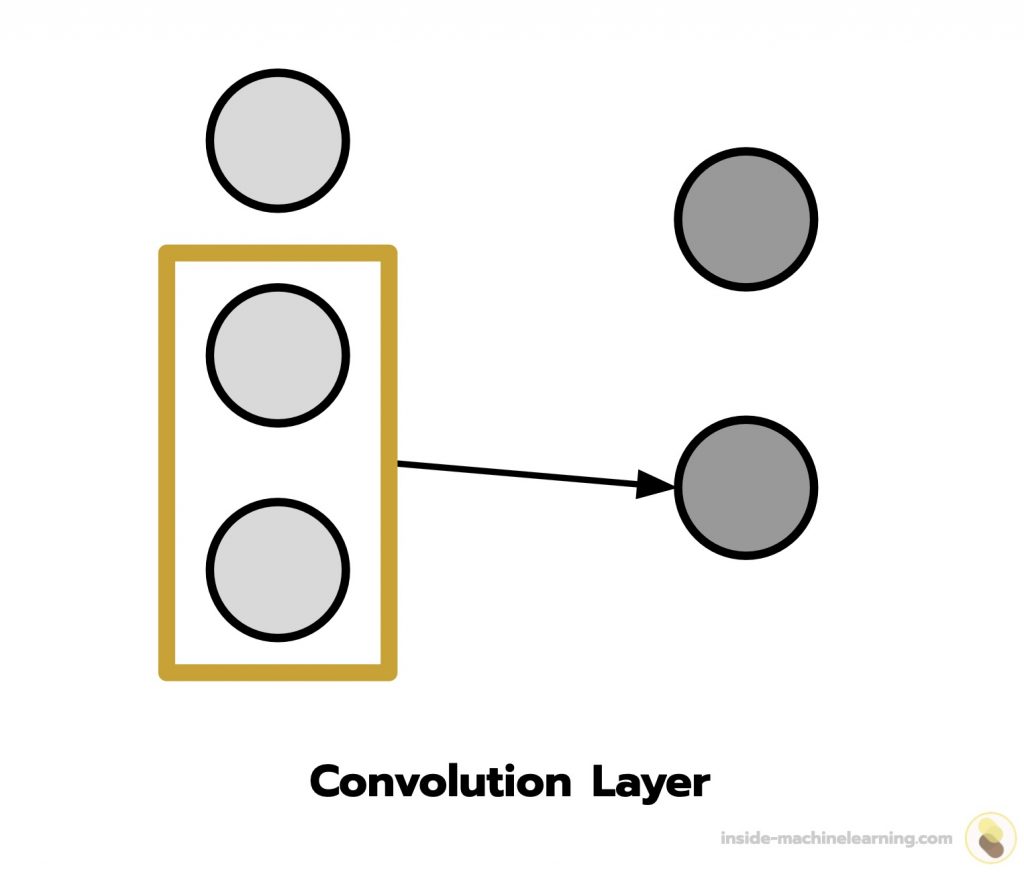

A convolutional neural network is made up of convolution layers.

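In a convolution layer, information is decomposed into small subgroups: each neuron processes only a small region of the input (for example, a patch of a few pixels), and the same filter is applied at every position. This enables the layers to extract relevant local features, such as edges or textures.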
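This region-by-region processing can be sketched in plain Python. It is a simplified sketch assuming a single-channel image, a single 2×2 filter, stride 1 and no padding; like deep learning libraries (for example `torch.nn.Conv2d`), it does not flip the kernel. The filter weights are hand-picked to detect vertical edges, whereas a real network learns them.

```python
from typing import List

Matrix = List[List[float]]

def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution (stride 1, no padding), without kernel flipping,
    as implemented in deep learning libraries (cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Each output value only looks at a small kh x kw patch of the image
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# A 4x4 "image": dark on the left, bright on the right
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked 2x2 vertical-edge detector (illustrative weights)
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The output is non-zero only where the dark-to-bright edge sits, which is the kind of local feature a convolution layer extracts.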

The functioning of convolution layers is inspired by the visual system of cats.

The cat’s visual system does not analyze all the information received by the eyes at once. Instead, each neuron concentrates on a small area of the visual field, and combining these partial analyses enables the visual system to understand the overall scene.

For example, when a cat observes a bird, each neuron concentrates on specific details such as the wings, head, legs, etc. Combined, this information enables the cat’s visual system to form a complete perception of the bird.

In a convolutional neural network, this information processing greatly improves the understanding of images and videos. Convolutional neural networks are therefore mainly used for Computer Vision tasks.

Convolutional neural networks are based on the visual system of cats. These networks feature convolution layers, which process information by decomposing it into small subgroups. This feature is particularly effective for image and video processing.

By the way, if your goal is to master Deep Learning, I've prepared the Action plan to Master Neural networks for you.

7 days of free advice from an Artificial Intelligence engineer to learn how to master neural networks from scratch:

- Plan your training

- Structure your projects

- Develop your Artificial Intelligence algorithms

I have based this program on scientific facts, on approaches proven by researchers, but also on my own techniques, which I have devised as I have gained experience in the field of Deep Learning.


Now we can get back to what I was talking about earlier.

Recurrent Neural Networks – RNN

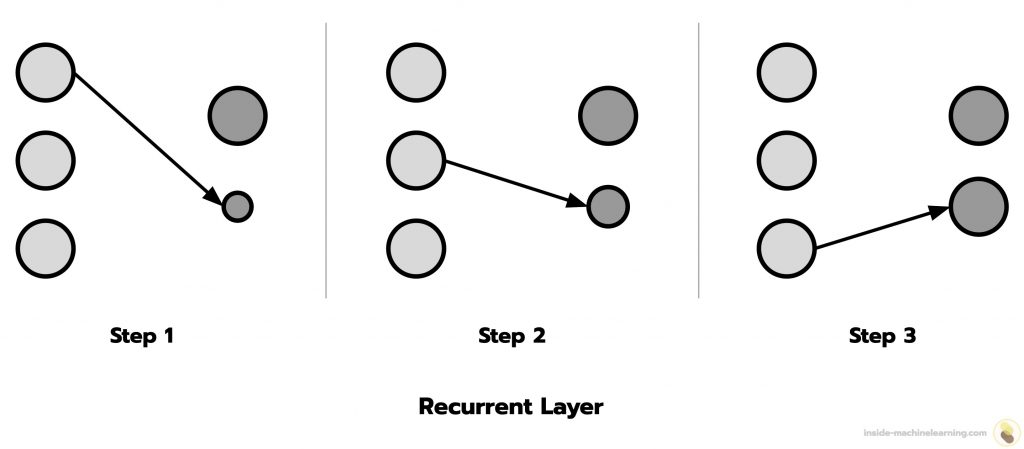

A recurrent neural network is made up of recurrent layers.

In a recurrent layer, the neurons process the elements of a sequence one after another and accumulate the results in an internal state. This enables the neurons to analyze each new element based on the analysis of the previous elements.
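Here is a minimal plain-Python sketch of this accumulation, assuming the classic "vanilla" recurrent formulation with a single scalar hidden state and a tanh activation. The weights `w_x`, `w_h` and `b` are arbitrary illustrative values; real recurrent layers (for example `torch.nn.RNN`) use vectors and learned weight matrices.

```python
import math
from typing import List

def rnn_forward(sequence: List[float], w_x: float, w_h: float, b: float) -> List[float]:
    """Minimal recurrent layer with a single scalar hidden state.

    At each step the new state mixes the current element with the previous
    state, so earlier elements influence how later ones are processed.
    """
    h = 0.0                      # initial state: nothing accumulated yet
    states = []
    for x_t in sequence:         # elements are processed one after another
        h = math.tanh(w_x * x_t + w_h * h + b)
        states.append(h)
    return states

# Same last element (1.0), different history
print(rnn_forward([0.0, 0.0, 1.0], w_x=1.0, w_h=0.5, b=0.0))
print(rnn_forward([1.0, 1.0, 1.0], w_x=1.0, w_h=0.5, b=0.0))
```

Both sequences end with the same element, yet the final states differ, because the state carries information about the earlier elements.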

The way recurrent layers work is inspired by memory.

Memory has the capacity to record, store and subsequently use information. Thanks to these capacities, we can not only learn new knowledge, but also interpret new information based on previously stored information. In this way, the brain can accumulate knowledge and reuse it in other contexts.

For example, when reading a detective story, our memory records and stores information about the characters, events and plot. As the story progresses, the brain analyzes new information in light of what came before. In some cases, this accumulation of information can enable us to guess the identity of the culprit before the denouement.

In a recurrent neural network, this information processing greatly improves text processing and, in general, sequential data processing. Recurrent neural networks are therefore mainly used for Natural Language Processing tasks.

Recurrent neural networks are based on the way memory works. These networks feature recurrent layers, enabling successive processing of information elements and accumulation of results. This feature is particularly effective for text processing.

Generative Adversarial Networks – GAN

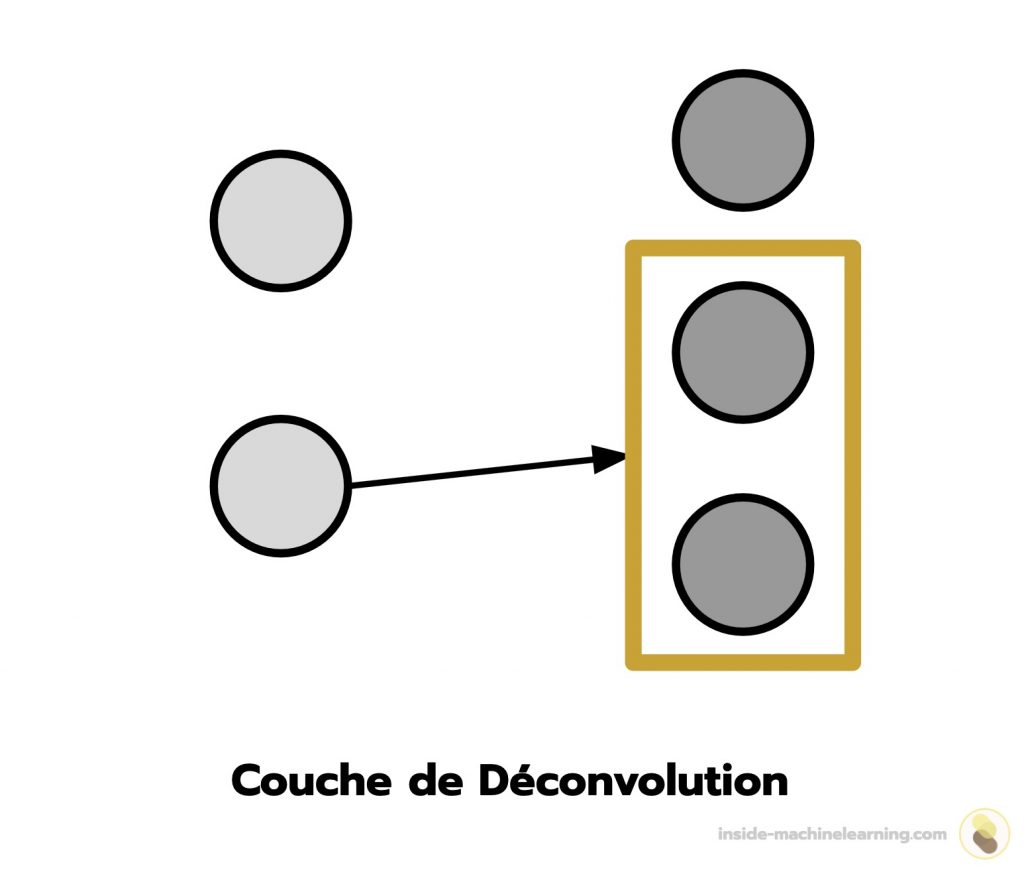

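A generative adversarial network is the assembly of two networks: the generator and the discriminator. For image generation, the generator is typically composed of deconvolution layers and the discriminator of convolution layers.

In a deconvolution layer (also called a transposed convolution layer), neurons process pieces of information by increasing their size: the output is larger than the input. This enables the neurons to generate information, for example to build a full image from a small set of values.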

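Note: a deconvolution layer relies on the same sliding-filter principle as the convolution layers described in the section on convolutional neural networks, but it enlarges the data instead of reducing it.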
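A one-dimensional transposed convolution shows how a deconvolution layer enlarges its input. This sketch assumes no padding: each input value "stamps" a scaled copy of the kernel into the output, and consecutive stamps are shifted by `stride`. With these settings the output length is `(len(x) - 1) * stride + len(kernel)`, which matches `torch.nn.ConvTranspose1d` when padding, output padding and dilation keep their defaults. The kernel values are illustrative.

```python
from typing import List

def conv_transpose1d(x: List[float], kernel: List[float], stride: int = 2) -> List[float]:
    """1D transposed convolution ("deconvolution") without padding.

    Each input value multiplies the whole kernel, and the result is added
    into the output at an offset of i * stride, so the output is longer
    than the input.
    """
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, value in enumerate(x):
        for j, w in enumerate(kernel):
            out[i * stride + j] += value * w
    return out

small = [1.0, 2.0, 3.0]                                # 3 values in
print(conv_transpose1d(small, [1.0, 0.5], stride=2))   # [1.0, 0.5, 2.0, 1.0, 3.0, 1.5]
```

Three input values become six output values; stacking such layers is how a generator can grow a small input into a full-size image.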

The generator can be thought of as a counterfeiter trying to produce fake money and use it undetected.

The discriminator, on the other hand, can be seen as a policeman trying to detect fake money.

The generator’s aim is to fool the discriminator, and the discriminator’s aim is to detect counterfeits among genuine and artificially generated items.

The generator and the discriminator are therefore in competition, which drives each of them to improve. When counterfeits become indistinguishable from genuine items, the result is considered satisfactory.
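This competition can be expressed through the two losses of the original GAN formulation by Goodfellow et al. The sketch below only computes these losses for given discriminator outputs (the probability the discriminator assigns to an item being genuine); it does not build or train any network. For the generator it uses the "non-saturating" loss that the same paper recommends in practice. The probability values are illustrative.

```python
import math

def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Penalizes the 'policeman' when it doubts genuine money (d_real low)
    or accepts fake money (d_fake high). Inputs are probabilities of 'genuine'."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake: float) -> float:
    """Penalizes the 'counterfeiter' when its fakes are spotted (d_fake low).
    Non-saturating variant: minimize -log D(G(z))."""
    return -math.log(d_fake)

# Early in training: the discriminator easily spots the fakes
print(discriminator_loss(d_real=0.9, d_fake=0.1), generator_loss(d_fake=0.1))
# Fakes indistinguishable from genuine items: the discriminator outputs 0.5
print(discriminator_loss(d_real=0.5, d_fake=0.5), generator_loss(d_fake=0.5))
```

Early on, the generator's loss is high and the discriminator's low; once the counterfeits fool the discriminator, it can do no better than guessing 0.5.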

In a generative adversarial network, this approach greatly improves the generation of information, usually images but also text. Generative adversarial networks are therefore used in both Computer Vision and NLP tasks.

Generative adversarial networks use a counterfeiter-police approach. The generator imitates items that the discriminator must detect among a group of items. This approach is particularly effective for image and text generation.

Transformers

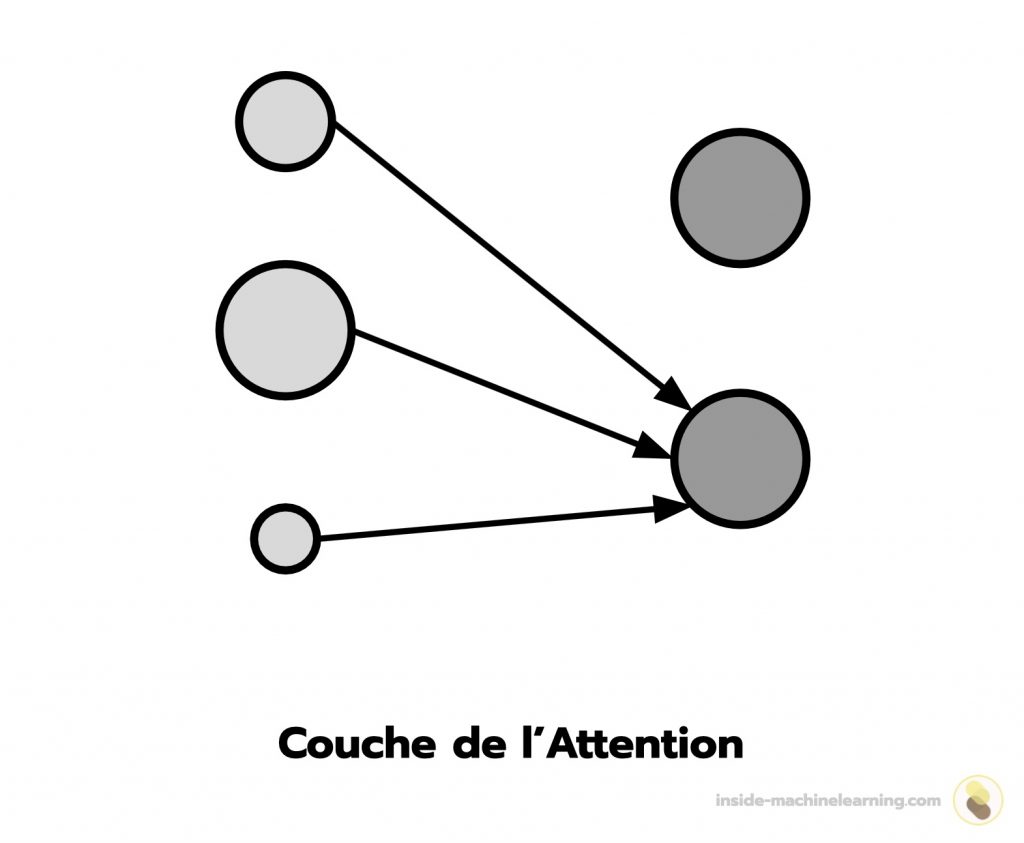

A transformer is made up of attention layers.

In an attention layer, neurons assign levels of importance to different input elements according to their relevance. This enables the layer to focus its attention on the most significant parts of the information.
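One widely used form of this mechanism is scaled dot-product attention, introduced with the Transformer architecture. The plain-Python sketch below computes it for a single query: each input element has a key (used to score its relevance) and a value (the information it carries). In a real transformer, queries, keys and values are produced by learned linear layers and several attention heads run in parallel; here they are hand-picked numbers for illustration.

```python
import math
from typing import List, Tuple

Vector = List[float]

def softmax(scores: Vector) -> Vector:
    m = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Tuple[Vector, Vector]:
    """Scaled dot-product attention for one query.

    Returns (weights, output): weights[i] is the importance given to input
    element i, and output is the weighted average of the values.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return weights, output

# Three input elements; the query best matches the second key
keys = [[1.0, 0.0], [0.0, 4.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [0.0, 2.0]
weights, output = attention(query, keys, values)
print([round(w, 3) for w in weights], [round(o, 3) for o in output])
```

Almost all the weight goes to the second element, whose key best matches the query, so the output is close to that element's value.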

The functioning of the attention layers is inspired by the mechanism of selective attention.

Selective attention is the human brain’s ability to assign a level of relevance to the stimuli it receives. This mechanism enables it to focus on important information while filtering out irrelevant or distracting stimuli.

For example, during a discussion in a busy café, where the sounds of conversation, dishes and music intermingle, a person may direct his selective attention to the voice of his friend nearby. His brain relegates the other surrounding voices and sounds to a lower level of relevance in order to focus on the conversation that interests him. This capacity for selective attention enables him to concentrate on his friend’s voice while filtering out superfluous distractions, thus facilitating communication and understanding in a noisy environment.

In a transformer, this mechanism greatly enhances information processing, whether for images or text. Transformers are used in Computer Vision and NLP for classification and regression tasks, as well as for generation.

Transformers use an approach based on selective attention, which enables neurons to assign a level of importance to each element of the input. This greatly enhances the processing of all types of data: images, text and much more.

Conclusion – Deep Learning algorithms

Creating categories for Deep Learning algorithms is more difficult than for traditional Machine Learning algorithms. This is because neural networks are built manually by the ML Engineer. As a result, the number of variations in algorithm structures is potentially infinite.

Nevertheless, Deep Learning algorithms can be differentiated by the main layers of which they are composed. Here are the major Deep Learning algorithms:

- Artificial neural networks

- Convolutional neural networks

- Recurrent neural networks

- Generative adversarial networks

- Transformers

Now, if you’re interested in further deepening your knowledge of Deep Learning, you can access my Action plan to Master Neural networks.

A program of 7 free courses that I’ve prepared to guide you on your journey to master Deep Learning.


Sources:

- PubMed – The effect of different types of music on patients’ preoperative anxiety: A randomized controlled trial

- Wikipedia – Convolutional Neural Network

- Wikipedia – Hopfield Network

- arXiv – Generative Adversarial Networks – Ian J. Goodfellow et al.
