In this article, I’ll show you how to use a Random Seed with TensorFlow to achieve reproducible results with your model.

Unlike traditional Machine Learning, training a Deep Learning model is a highly complex process.

In this process is used randomness, among other things, to initialize neuron weights.

This random aspect of Deep Learning is an obstacle to the control and reproducibility of results.

However, thanks to random seed (used appropriately), it is possible to overcome this obstacle and obtain stable results!

What is a Random Seed?

A random seed is a value used in certain algorithms to simulate randomness. In IT, this value is crucial for initializing a process that will produce sequences of numbers.

In other words, the random seed is used to obtain a “random” sequence of numbers.

This seed is generally determined as a function of the current time. However, the developer may decide to give a value to this random seed to control the process.

If the same seed is used in an algorithm, the sequence of numbers generated will always be identical.

For example, if a video game uses a random seed to determine the contents of a chest, two players using the same seed will obtain an identical treasure, despite the “random” nature of the process.

The random seed in computer science is a crucial concept in ensuring the reproducibility and control of processes. It gives developers and researchers control over algorithms.

As a result, although some algorithms may appear random, their process can actually be controlled. Such is the case with Deep Learning algorithms.

Why use reproducible results when training Deep Learning models?

In Deep Learning, a random seed can be used, for example, to initialize the weights of a neural network.

The main reason for using a random seed is to guarantee that the results will be reproducible.

In Deep Learning, even slight variations in the initial conditions can lead to very different results. By setting a seed, researchers and engineers ensure that their experiments can be reproduced and validated by others.

This aspect is crucial!

A researcher can obtain promising results with a specific model. But without a fixed seed, it would be extremely difficult for other researchers to reproduce exactly the same training conditions to verify his solution.

The random seed also enables better control of experiments.

It enables scientists to isolate and understand the effect of certain changes in model architecture or hyperparameters.

In addition, different seeds can lead to slight variations in model performance.

Researchers can use this to their advantage, by testing several seeds to find the one offering the best performance.

The use of a seed in Deep Learning model training is essential for reproducibility, control and optimization of experiments.

Let’s take a look at how to set a random seed in TensorFlow in the next section.

How to use a Random Seed when training Deep Learning models

Initialization

In a Deep Learning model, many elements depend on the random seed.

Reproducing the results is then feasible if the initial programming conditions are reproduced.

Therefore, if you want to reproduce my results, I recommend that you use the same programming conditions:

- Environment: Google Colab

- Version:

- Python – 3.10.12

- TensorFlow – 2.14.0

- NumPy – 1.23.5

Having said that, even if your environment isn’t similar to mine, you’ll be able to get reproducible results provided your environment doesn’t change drastically from one test to the next.

By the way, if your goal is to master Deep Learning - I've prepared the Action plan to Master Neural networks. for you.

7 days of free advice from an Artificial Intelligence engineer to learn how to master neural networks from scratch:

- Plan your training

- Structure your projects

- Develop your Artificial Intelligence algorithms

I have based this program on scientific facts, on approaches proven by researchers, but also on my own techniques, which I have devised as I have gained experience in the field of Deep Learning.

To access it, click here :

Now we can get back to what I was talking about earlier.

Precision: if you don’t change the version of your libraries and of Python between two runs, the results will be reproducible.

To begin with, we’ll determine TensorFlow’s random seed using two functions:

import tensorflow as tf

SEED = 42

tf.config.experimental.enable_op_determinism()

tf.random.set_seed(SEED)tf.config.experimental.enable_op_determinism(): guarantees that TensorFlow operations behave deterministically, i.e. the same inputs will always give the same outputs.tf.random.set_seed(SEED): sets a global seed for TensorFlow. This affects the generation of random numbers in TensorFlow, which is crucial for initializing model weights and other random aspects of training.

Next, we load arbitrary data that we’ll use to train our model:

# Load MNIST dataset

mnist = tf.keras.datasets.mnist

(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

# Normalize the input image so that each pixel value is between 0 to 1.

train_images = train_images / 255.0

test_images = test_images / 255.0Next, we define a structure for our Deep Learning model by initializing the weights with our random seed:

# Define the model architecture.

model = tf.keras.Sequential([

tf.keras.layers.InputLayer(input_shape=(28, 28)),

tf.keras.layers.Reshape(target_shape=(28, 28, 1)),

tf.keras.layers.Conv2D(filters=12, kernel_size=(3, 3), activation='relu', kernel_initializer=tf.keras.initializers.GlorotUniform(seed=SEED)),

tf.keras.layers.MaxPooling2D(pool_size=(2, 2)),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(10, kernel_initializer=tf.keras.initializers.GlorotUniform(seed=SEED))

])GlorotUniform can be used to define a seed to ensure that weights are initialized identically at each runtime.

Reproducible results

The model can now be compiled and trained:

model.compile(optimizer='adam',

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=['accuracy'])

model.fit(

train_images,

train_labels,

epochs=10,

validation_split=0.1,

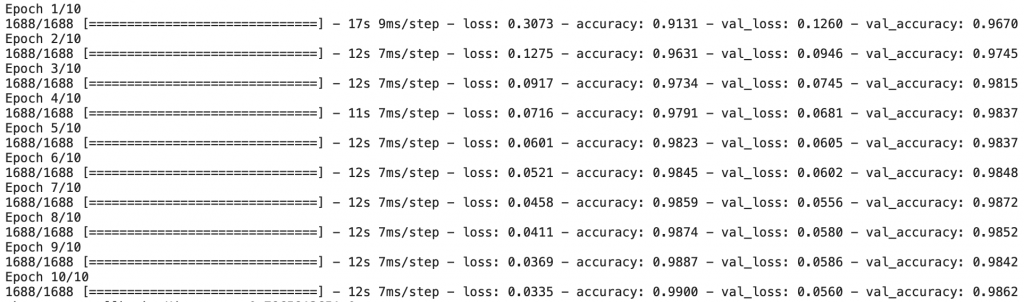

)If you run this code under the same conditions as I did, your results will be identical to these:

Finally, we can evaluate the model’s performance:

_, model_accuracy = model.evaluate(test_images, test_labels, verbose=0)

print('Test accuracy:', model_accuracy)Output:Test accuracy: 0.9821000099182129

Here again, if your execution conditions are the same as mine, you’ll get this score.

In general, whatever your result, if you run all this code again, you’ll get the same results as those obtained in the first run.

Thanks to random seed, we were able to initialize the model weights and obtain reproducible results.

This technique is the key to obtaining stable results and controlling your neural network. But it’s not the only technique you need to know to master Deep Learning.

If you want to deepen your knowledge in the field, you can access my Action plan to Master Neural networks.

A program of 7 free courses that I’ve prepared to guide you on your journey to master Deep Learning.

If you’re interested, click here:

sources:

One last word, if you want to go further and learn about Deep Learning - I've prepared for you the Action plan to Master Neural networks. for you.

7 days of free advice from an Artificial Intelligence engineer to learn how to master neural networks from scratch:

- Plan your training

- Structure your projects

- Develop your Artificial Intelligence algorithms

I have based this program on scientific facts, on approaches proven by researchers, but also on my own techniques, which I have devised as I have gained experience in the field of Deep Learning.

To access it, click here :