In this article you’ll find everything you need to use YOLOv6: implementation details, how to interpret the results and more!

YOLOv6 is a 2022 version of YOLO.

YOLO stands for You Only Look Once. It is a Deep Learning model used for object detection in images and videos.

The first version of YOLO was released in 2016. Since then, frequent updates have been released with the latest improvements: faster computation and better accuracy.

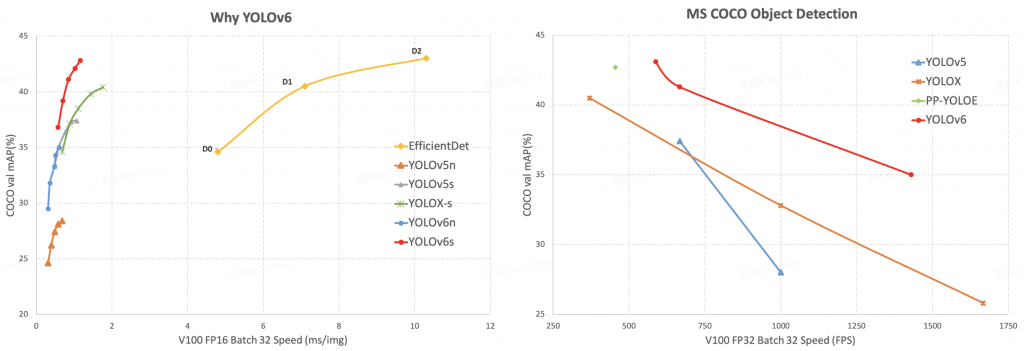

Here are the results of YOLOv6 compared to other versions on the COCO dataset:

Now, let’s see how to use it!

How to use YOLOv6?

The following lines of code work in any Notebook/Colab instance. If you want to run YOLOv6 in a terminal or locally, just remove the leading “!” or “%” from each line of code.

To use YOLOv6, we first need to download the GitHub repository!

To do this, we’ll use the git clone command to download it into our Notebook:

!git clone https://dagshub.com/nirbarazida/YOLOv6

Then, we move into the folder we just downloaded:

%cd YOLOv6

Next, we have to install all the libraries needed to use YOLOv6.

The libraries are the following:

- torch

- torchvision

- numpy

- opencv-python

- PyYAML

- scipy

- tqdm

- addict

- tensorboard

- pycocotools

- dvc

- onnx
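If you want to check which of these libraries are already available before installing anything, here is a minimal Python sketch you can run in a Notebook cell. It assumes the usual import name of each package (for example `cv2` for opencv-python and `yaml` for PyYAML); `PIP_TO_MODULE` and `missing_packages` are hypothetical names made up for this example.

```python
import importlib.util

# Map each pip package name from the list above to the module name it is
# imported under (these differ for opencv-python and PyYAML).
PIP_TO_MODULE = {
    "torch": "torch",
    "torchvision": "torchvision",
    "numpy": "numpy",
    "opencv-python": "cv2",
    "PyYAML": "yaml",
    "scipy": "scipy",
    "tqdm": "tqdm",
    "addict": "addict",
    "tensorboard": "tensorboard",
    "pycocotools": "pycocotools",
    "dvc": "dvc",
    "onnx": "onnx",
}


def missing_packages(mapping=PIP_TO_MODULE):
    """Return the pip names whose top-level module cannot be found."""
    # find_spec only locates the module; it does not import it.
    return [pip_name for pip_name, module in mapping.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    print("Missing:", ", ".join(missing) if missing else "none")
```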

Fortunately, only one line of code is needed to install all these dependencies:

!pip install -r requirements.txt

Next, we need to download the weights of the Neural Network.

With the git clone command, we’ve downloaded the whole architecture of the Neural Network (the layers of the model and the functions to train, use and evaluate it), but to use it, we also need the weights.

In a Neural Network, the weights are the parameters learned by the model during training.

You can manually download any version of the weights here and then put the file in the YOLOv6 folder.

Or simply download it with this line of code:

!wget https://github.com/meituan/YOLOv6/releases/download/0.1.0/yolov6n.pt

At the time I’m writing these lines, YOLOv6 has just been released. Updates may occur, and the weights may change, as well as the URL of their repository. If the link no longer works, check the YOLOv6 GitHub repository for the latest version.

One last thing before using the model: upload your image!

Either a single image, or several in a folder (YOLOv6 can handle several images at once).
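To make the “single image or folder” idea concrete, here is a minimal sketch of how a source path can be turned into a list of images. It is not YOLOv6’s own loading code: `collect_images` is a hypothetical helper, and the set of accepted extensions is an assumption.

```python
from pathlib import Path

# Assumed image extensions, for illustration only; YOLOv6 may accept others.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def collect_images(source):
    """Return a sorted list of image paths for a single file or a folder."""
    path = Path(source)
    if path.is_file():
        # A single file is kept only if it looks like an image.
        return [path] if path.suffix.lower() in IMAGE_EXTENSIONS else []
    if path.is_dir():
        # A folder yields every image file directly inside it.
        return sorted(p for p in path.iterdir()
                      if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS)
    raise FileNotFoundError(f"No such file or folder: {source}")
```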

Feeling lazy? You can simply download our test image with one line of code:

!wget https://raw.githubusercontent.com/tkeldenich/Tutorial_YOLOv6/main/man_cafe.jpg

FINALLY, we can use YOLOv6!

To do this, we’ll call the infer.py file in the tools folder.

The python code in this file will run the detection for us.

We only need to specify a few important parameters, in our case:

By the way, if your goal is to master Deep Learning, I've prepared the Action plan to Master Neural Networks for you.

7 days of free advice from an Artificial Intelligence engineer to learn how to master neural networks from scratch:

- Plan your training

- Structure your projects

- Develop your Artificial Intelligence algorithms

I have based this program on scientific facts, on approaches proven by researchers, but also on my own techniques, which I have devised as I have gained experience in the field of Deep Learning.

To access it, click here:

Now we can get back to what I was talking about earlier.

- the weights we are using: --weights yolov6n.pt

- the image on which we want to apply the detection: --source ./man_cafe.jpg

!python tools/infer.py --weights yolov6n.pt --source ./man_cafe.jpg

If you use your own image or folder, just change this last part to ./your_path.
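If you prefer to launch the detection from Python rather than with the `!python` line, a minimal sketch could wrap the same command with `subprocess`. It only uses the flags that appear in this article (`--weights`, `--source`, `--conf-thres`, `--iou-thres`); `build_infer_command` and `run_infer` are hypothetical helpers, and the sketch assumes you run it from inside the YOLOv6 folder.

```python
import subprocess
import sys


def build_infer_command(weights, source, conf_thres=None, iou_thres=None):
    """Build the argument list for tools/infer.py using this article's flags."""
    # sys.executable is the Python interpreter running this code (the Notebook kernel).
    cmd = [sys.executable, "tools/infer.py", "--weights", weights, "--source", source]
    # Optional thresholds are only passed when set; otherwise infer.py keeps its defaults.
    if conf_thres is not None:
        cmd += ["--conf-thres", str(conf_thres)]
    if iou_thres is not None:
        cmd += ["--iou-thres", str(iou_thres)]
    return cmd


def run_infer(weights, source, conf_thres=None, iou_thres=None):
    """Run tools/infer.py; raises CalledProcessError if the script fails."""
    cmd = build_infer_command(weights, source, conf_thres, iou_thres)
    return subprocess.run(cmd, check=True)
```

For example, `run_infer("yolov6n.pt", "./man_cafe.jpg")` runs the same detection as the command above.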

Understand the results

You’ll find the detection output in the folder YOLOv6/runs/inference/exp/

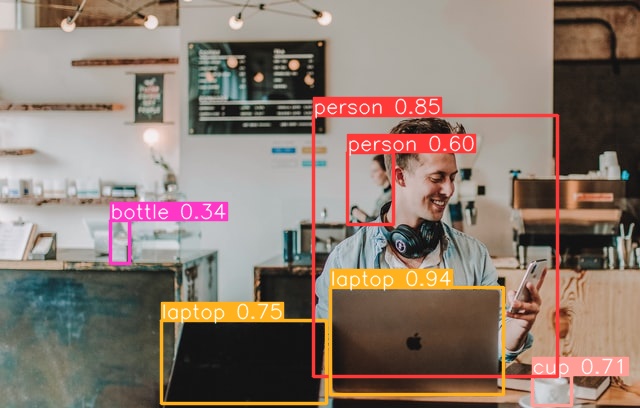

Here is our result :

First of all, we can see that YOLOv6 has a very good detection capacity. It even detects the person right behind our main character.

It also indicates the confidence with which it detects each object or person. The most obvious objects are detected with high confidence. But on the left, YOLOv6 detects a bottle. Here the model is wrong, but its confidence is low.

It detects with 34% confidence that there is a bottle on the left table. This means that YOLOv6 is far from sure about this prediction.

Can we simply remove this prediction?

Yes: this is possible when running YOLOv6.

Remember that we have defined some parameters. The essential ones are the weights and the source (image path), but there are also parameters with default values that you can change to suit your needs.

There are other parameters, for example:

- Confidence threshold: conf-thres (default value: 0.25)

- Intersection over Union threshold: iou-thres (default value: 0.45)
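The IoU threshold is used by non-maximum suppression (NMS): when several boxes overlap by more than this threshold, only the one with the highest confidence is kept. Here is a minimal pure-Python sketch of IoU and greedy NMS, assuming boxes given as (x1, y1, x2, y2). It illustrates the technique and is not YOLOv6’s actual post-processing code; in particular, it ignores class labels, whereas detectors typically apply NMS per class.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    # Corners of the intersection rectangle (may be empty).
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thres=0.45):
    """Greedy non-maximum suppression; returns the indices of the kept boxes."""
    # Visit boxes from the most to the least confident.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with an already kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_thres for j in keep):
            keep.append(i)
    return keep
```

A lower `iou_thres` removes more overlapping boxes; a higher one lets nearby boxes coexist.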

The confidence threshold defines the minimum confidence score a detection must have to be kept.

By default, if a detection has a confidence score lower than 25%, it is discarded.
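As a minimal sketch of this rule, the function below keeps only the detections whose score reaches the threshold. The detection format is an assumption made for the example, and only the 34% bottle comes from our image; the person’s score is invented for illustration. This sketch keeps a score exactly equal to the threshold.

```python
def filter_by_confidence(detections, conf_thres=0.25):
    """Keep only detections whose confidence score is at least conf_thres."""
    return [d for d in detections if d["confidence"] >= conf_thres]


# Illustrative detections: the 0.90 person score is made up, the 0.34 bottle is from the article.
detections = [
    {"label": "person", "confidence": 0.90},
    {"label": "bottle", "confidence": 0.34},
]

print([d["label"] for d in filter_by_confidence(detections)])        # → ['person', 'bottle']
print([d["label"] for d in filter_by_confidence(detections, 0.35)])  # → ['person']
```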

Let’s change the confidence threshold to 0.35 :

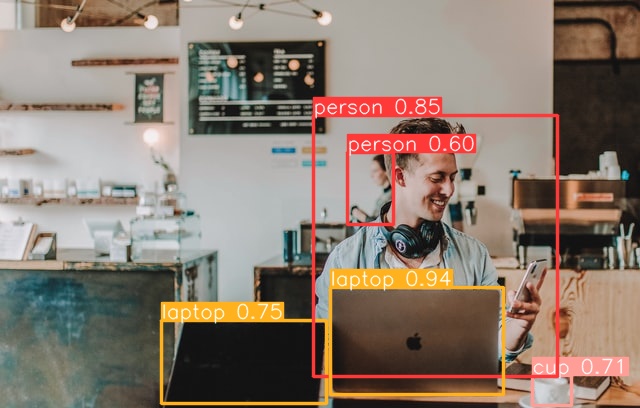

!python tools/infer.py --weights yolov6n.pt --source ./man_cafe.jpg --conf-thres 0.35Result :

Here we see that the bottle has not been kept.

Try YOLOv6 on your own project, experiment with different values and set the confidence threshold that suits your needs 😉

Changing weights

Now you understand a bit more about what YOLOv6 is.

Let’s go deeper.

There are different versions of YOLOv6 weights.

So far, we have used the nano version of these weights (yolov6n.pt), which is the smallest and fastest one.

Larger weights mean three things compared to smaller ones:

- Better results (or at least a better understanding of the complexity)

- Slower computation speed

- More memory space used

So which version should you choose? It depends on the accuracy you need and on your time and memory constraints. Here are the available versions, from smallest to largest:

- Nano: https://github.com/meituan/YOLOv6/releases/download/0.1.0/yolov6n.pt

- Tiny: https://github.com/meituan/YOLOv6/releases/download/0.1.0/yolov6t.pt

- Small: https://github.com/meituan/YOLOv6/releases/download/0.1.0/yolov6s.pt

Again, you can download them manually here and then put them into your folder, or use wget and specify the version you want:

!wget weight_file_path

Replace weight_file_path with one of the URLs above. Depending on the version you’ve chosen, the downloaded file will be:

- Nano: yolov6n.pt

- Tiny: yolov6t.pt

- Small: yolov6s.pt
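If you would rather fetch the weights from Python than with wget, here is a minimal sketch built from the release URLs listed in this article. It assumes the 0.1.0 release tag and these three file names (newer releases may use other names), and `weights_url` / `download_weights` are hypothetical helpers.

```python
import urllib.request
from pathlib import Path

# Release tag and file names taken from the URLs in this article; newer releases may differ.
RELEASE_URL = "https://github.com/meituan/YOLOv6/releases/download/{tag}/{name}"
WEIGHT_FILES = {"nano": "yolov6n.pt", "tiny": "yolov6t.pt", "small": "yolov6s.pt"}


def weights_url(version, tag="0.1.0"):
    """Return (file name, download URL) for 'nano', 'tiny' or 'small'."""
    name = WEIGHT_FILES[version]
    return name, RELEASE_URL.format(tag=tag, name=name)


def download_weights(version, folder=".", tag="0.1.0"):
    """Download the weight file into folder unless it is already there."""
    name, url = weights_url(version, tag)
    target = Path(folder) / name
    if not target.exists():
        urllib.request.urlretrieve(url, target)
    return target
```

For example, `download_weights("tiny")` saves yolov6t.pt in the current folder; that file name is what you pass to --weights.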

To use it, replace weight_file with this file name:

!python tools/infer.py --weights weight_file --source ./man_cafe.jpg

Now you know how to use YOLOv6!

If you want to know more about Computer Vision, feel free to check our category dedicated to this topic.

See you soon in the next post 😉

