In this article, we explain THE method to never again confuse True Positives, False Negatives, True Negatives and False Positives.

These terms describe the types of result we can get from a prediction with two possible outcomes (in Machine Learning, this is called binary classification).

They let us evaluate the performance of our model and know how reliable its predictions are.

These terms are often confused with each other, which is why we give you a technique to remember them!

Why use True Positives / False Negatives?

To measure the performance of a Machine Learning model, we cannot simply look at the number of correct predictions.

Taken alone, that number can give us a distorted view of our model.

Let’s take the example of a choice with two possibilities: we stand at the exit of a tunnel and predict what will come out. We imagine that there are only two possibilities here: either a car or a motorcycle.

If 80% of the users of this road are in cars and we predict every time that a car will come out of the tunnel, we will end up with a success rate of 80%.

This is what we call a naive prediction because there is no real reasoning behind it: we don’t predict, we arbitrarily decide that everything that comes out of the tunnel will be a car.

The problem is that this approach works when 80% of the vehicles on the road are cars, but if the context changes, it will not work anymore.
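To make this concrete, here is a minimal sketch of the naive strategy. The two traffic lists and the `naive_accuracy` helper are invented for illustration and do not come from any real dataset.

```python
def naive_accuracy(vehicles, guess="car"):
    """Share of observations for which always answering `guess` is correct."""
    hits = sum(1 for vehicle in vehicles if vehicle == guess)
    return hits / len(vehicles)

# Hypothetical traffic: tunnel A carries 80% cars, tunnel B only 30%.
tunnel_a = ["car"] * 80 + ["motorcycle"] * 20
tunnel_b = ["car"] * 30 + ["motorcycle"] * 70

print(naive_accuracy(tunnel_a))  # 0.8
print(naive_accuracy(tunnel_b))  # 0.3
```

The same "always a car" rule looks good on the first tunnel and poor on the second, even though nothing about the rule itself has changed.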

In fact, this type of prediction is not reliable. Since our data is imbalanced (there are many more cars than motorcycles), our measured performance is skewed too.

That’s why a naive approach is not effective. We need a smart approach.

What we are trying to build in Machine Learning is exactly that: a model that can be reused in different contexts (in our case, on different tunnels).

Fortunately for us, there is a performance analysis that rewards smart models over naive ones.

How do True Positives / False Negatives work?

This method is called True Positives/False Negatives.

Let’s go back to our tunnel example.

Here we have two possibilities:

- a car comes out of the tunnel

- a motorcycle comes out of the tunnel

The objective here is to measure the performance of our prediction on these two choices.

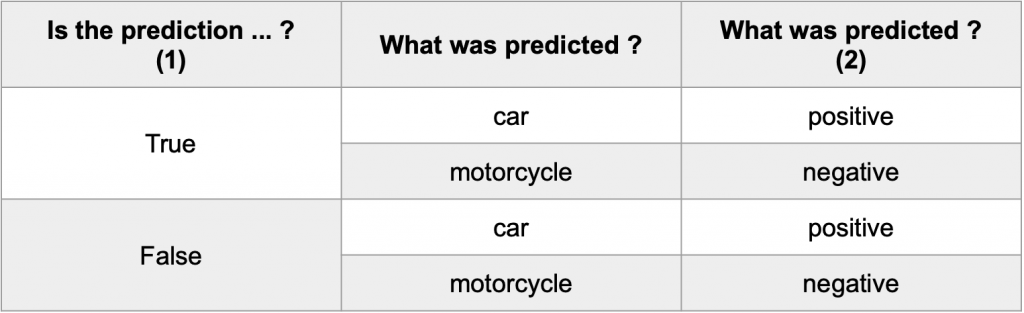

By default, we’ll say that the car corresponds to the “positive” choice and the motorcycle to the “negative” choice.

For each of these choices, either our prediction is true (good) or it is false (bad).

For example, we predict that a car will leave the tunnel. We place ourselves in the “positive” choice.

A car does come out of the tunnel, so our prediction is true. It is a True Positive.

If, on the other hand, our prediction is wrong (a motorcycle comes out of the tunnel), we say that it is a False Positive.

Same thing for the “negative” choice: we predict that it is a motorcycle that will come out of the tunnel.

A motorcycle comes out, so our prediction is true. It is a True Negative.

But if a car comes out, our prediction is false. It is a False Negative.

In the end, we have four possible results:

- a car comes out of the tunnel
  - we predicted that a car would come out – True Positive
  - we predicted that a motorcycle would come out – False Negative
- a motorcycle comes out of the tunnel
  - we predicted that a car would come out – False Positive
  - we predicted that a motorcycle would come out – True Negative
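The four cases listed above can be sketched as a small function. The name `outcome` and the `POSITIVE` constant are hypothetical, chosen only for this illustration; the convention car = positive is the one used in this article.

```python
POSITIVE = "car"  # convention used in this article; "motorcycle" is negative

def outcome(actual, predicted):
    """Return which of the four results a single prediction falls into."""
    if predicted == POSITIVE:
        # We predicted the positive class: correct only if a car really came out.
        return "True Positive" if actual == POSITIVE else "False Positive"
    # We predicted the negative class: correct only if a motorcycle came out.
    return "True Negative" if actual != POSITIVE else "False Negative"

# Enumerate every combination of what came out and what we predicted.
for actual in ("car", "motorcycle"):
    for predicted in ("car", "motorcycle"):
        print(f"{actual} comes out, we predicted {predicted}: {outcome(actual, predicted)}")
```

Note that the word Positive or Negative always comes from the prediction, and the word True or False from whether the prediction matched what actually came out.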

Even once you have seen them, it is easy to confuse these concepts. That’s why we offer you two methods to remember them easily!

Written method

Here we must keep in mind that:

- car = positive

- motorcycle = negative

Then, for each prediction, we use the sentence “We predicted …(2) and it was …(1)”.

For example, if we predicted that a car would come out of the tunnel, but in the end it was a motorcycle that came out, we would have:

“We predicted positive (2) and it was false (1)”.

We read (1) and then (2), which gives us: False Positive.

Said in a more detailed way:

“We predicted that a car (2) would come out of the tunnel and it was ultimately false (1)”.

We read (1) and then (2), which gives us False Car. Since we said at the start that car = positive, we have a False Positive.

The method is therefore to remember the sentence “We predicted …(2) and it was …(1)” and to complete it according to the prediction.
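As a rough illustration, the sentence can be filled in mechanically. The function name `written_method` and its parameters are assumptions made for this sketch, not part of any library.

```python
def written_method(predicted, actual, positive="car"):
    """Fill in "We predicted ...(2) and it was ...(1)" and name the result."""
    # (2) what we predicted, expressed as positive or negative
    part_2 = "positive" if predicted == positive else "negative"
    # (1) whether that prediction turned out to be true or false
    part_1 = "true" if predicted == actual else "false"
    sentence = f"We predicted {part_2} (2) and it was {part_1} (1)"
    # Read (1) first, then (2), to get the name of the result.
    name = f"{part_1.capitalize()} {part_2.capitalize()}"
    return sentence, name

sentence, name = written_method(predicted="car", actual="motorcycle")
print(sentence)  # We predicted positive (2) and it was false (1)
print(name)      # False Positive
```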

Visual method

Here again, keep in mind the basic assumption:

- car = positive

- motorcycle = negative

For example: we predicted that a motorcycle would come out of the tunnel, and in the end it is indeed a motorcycle that comes out.

In this case our prediction is true (1) and we predicted that it was a motorcycle = negative (2).

We take (1) and (2), which gives us: True Negative.

For this method you only need to keep this table in mind and fill in the middle column with the corresponding choices (in our case “car” and “motorcycle”).

Good to know

In Machine Learning, in order to display the result of a prediction model with two possibilities (like the example we just saw), we use a confusion matrix.

The confusion matrix is in fact a table in which we display the number of predictions according to each possibility:

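| | Predicted positive | Predicted negative |
| --- | --- | --- |
| Actually positive | Number of True Positives | Number of False Negatives |
| Actually negative | Number of False Positives | Number of True Negatives |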
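Here is a minimal sketch of how such a table could be counted by hand in plain Python. The `confusion_matrix` helper and the sample observations are invented for this example; the layout follows the table above (True Positives and False Negatives on the first row, False Positives and True Negatives on the second). Libraries may order rows and columns differently, so check their documentation before comparing outputs.

```python
def confusion_matrix(actuals, predictions, positive="car"):
    """Count the four results and return them as [[TP, FN], [FP, TN]]."""
    tp = fn = fp = tn = 0
    for actual, predicted in zip(actuals, predictions):
        if actual == positive and predicted == positive:
            tp += 1  # a car came out and we predicted a car
        elif actual == positive:
            fn += 1  # a car came out but we predicted a motorcycle
        elif predicted == positive:
            fp += 1  # a motorcycle came out but we predicted a car
        else:
            tn += 1  # a motorcycle came out and we predicted a motorcycle
    return [[tp, fn], [fp, tn]]

# Hypothetical observations at the tunnel exit and the matching predictions.
actuals     = ["car", "car", "car", "motorcycle", "motorcycle", "car"]
predictions = ["car", "motorcycle", "car", "car", "motorcycle", "car"]

print(confusion_matrix(actuals, predictions))  # [[3, 1], [1, 1]]
```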

You may then ask: is the confusion matrix actually just a fancy term for “results in table form”?

I would answer that yes, it is. Some people like to make things complicated for no reason, but it is still a widely used term in Machine Learning!

Remember that the confusion matrix is a table that displays the results of the model.

To go further and learn how to use True Positives and False Negatives effectively, you can continue reading with this post on Recall, Accuracy and F1 Score! 🙂

Sources:

- L. Antiga, Deep Learning with PyTorch (2020, Manning Publications)

- Photo by Ryan Millsap on Unsplash
