Today, we’re diving into the fascinating world of Retrieval-Augmented Generation, commonly known as RAG, in deep learning.

This isn’t just another tech buzzword; it’s a groundbreaking concept reshaping how AI systems understand and interact with the world around them.

Think of it as giving AI a supercharged brain, enabling it to pull information from a vast pool of knowledge and use it to make more informed, accurate decisions.

Whether you’re a deep learning enthusiast or just curious about the latest in AI, understanding RAG is crucial to grasping where artificial intelligence is heading.

So, buckle up and let’s embark on this exciting journey into the world of RAG!

Understanding the Basics of RAG

Before we get into the nitty-gritty, let’s break down what Retrieval-Augmented Generation really means. In the simplest terms, RAG is a cutting-edge approach in deep learning that combines the power of information retrieval with the finesse of language generation. Traditional language models, like the ones that power your favorite chatbots, generate responses based on their training data. They’re like students who’ve crammed for an exam; they can only recall what they’ve studied.

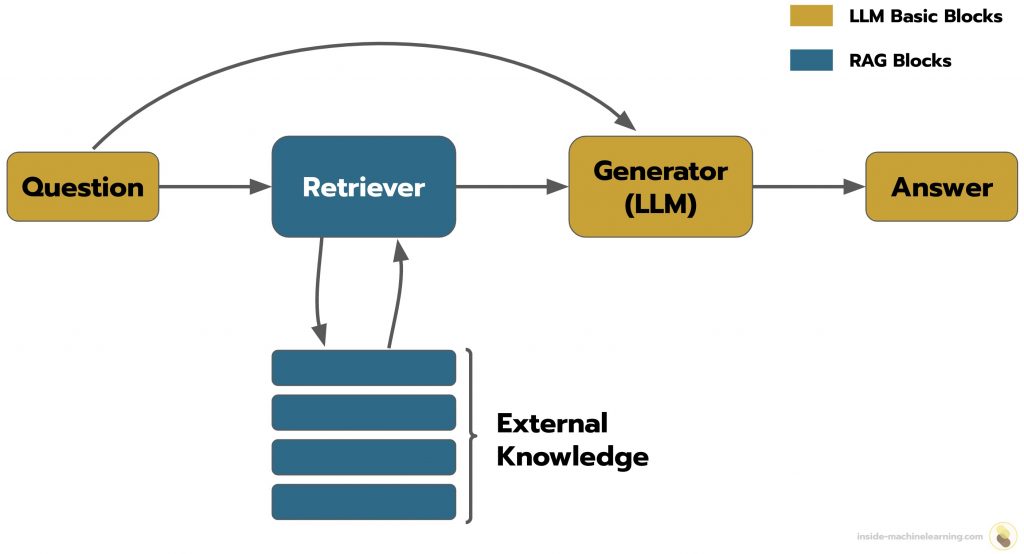

RAG, on the other hand, is like a super student with internet access during the test. It doesn’t just rely on what it’s been fed during training. Instead, it actively retrieves information from external data sources to enhance its responses. This is where the “retrieval-augmented” part comes in. It’s not just generating text; it’s pulling in relevant information from a vast corpus of data to make its output more accurate and contextually rich.

Here’s a simple analogy: imagine you’re writing an essay. A traditional language model would be like writing from memory, relying on what you already know. RAG is like having the ability to look up information on the fly and incorporate it into your essay, making it more informative and well-rounded.

But RAG isn’t just about accessing a treasure trove of information; it’s about doing so smartly. It ranks candidate passages by how closely they match the query, keeps the most relevant ones, and hands them to the language model alongside the question so they can be woven into the response. This ability to dynamically integrate external knowledge makes RAG models incredibly powerful, especially in tasks requiring deep understanding and contextual awareness.

How RAG Works: A Technical Overview

The Mechanics Behind the Magic

Now, let’s peek under the hood of RAG and understand the technical wizardry that makes it tick. At the heart of RAG lie Large Language Models (LLMs), which form the backbone of this advanced AI system. But what sets RAG apart is how it feeds these LLMs, through a series of steps that runs from data sourcing to final output.

Step 1: Gathering the Brain’s Food – Source Data

First things first, RAG needs food for thought, which comes in the form of source data. This data, ranging from text documents to web pages, serves as a knowledge reservoir. The more diverse and high-quality this data is, the smarter our RAG system becomes. Imagine feeding it the entire Wikipedia – that’s the level of knowledge we’re talking about!

However, it’s not just about quantity; quality plays a huge role too. The data needs to be accurate, up-to-date, and free from redundancy. Think of it as preparing a healthy meal for the brain; the better the ingredients, the better the brain functions.
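To make the “free from redundancy” point concrete, here is a minimal sketch of one way to drop duplicate documents before they reach the rest of the pipeline. The function names `normalize` and `deduplicate` are my own, and the approach is an assumption for illustration: it only catches texts that are identical once case and whitespace are ignored, not near-duplicates.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return re.sub(r"\s+", " ", text.strip().lower())


def deduplicate(documents: list[str]) -> list[str]:
    """Keep the first occurrence of each document, comparing normalized text."""
    seen: set[str] = set()
    unique: list[str] = []
    for doc in documents:
        # Hashing the normalized text keeps the "seen" set small for long documents.
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


docs = [
    "RAG combines retrieval with generation.",
    "rag combines   retrieval with generation.",  # same text, different case and spacing
    "Embeddings map text to vectors.",
]
print(deduplicate(docs))
```

Catching paraphrases or lightly edited copies would need a fuzzier comparison, which is beyond this sketch.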

Step 2: Chopping it Down – Data Chunking

Next, we chop this vast data into manageable pieces, a process known as data chunking. This makes it easier for RAG to scan through the information and pick out relevant bits efficiently. These chunks can be as big as entire documents or as small as paragraphs or sentences. The key is to make them digestible for the model.
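Here is a small sketch of what chunking can look like in code. It splits on words and lets neighboring chunks share a few words so that a sentence cut at a boundary still appears whole in one of them. The function name `chunk_words`, the sizes, and the overlap idea are assumptions on my part; the right chunk size depends on your data and model.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of at most `chunk_size` words.

    Consecutive chunks share `overlap` words.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


sample = " ".join(str(i) for i in range(10))  # "0 1 2 ... 9"
for chunk in chunk_words(sample, chunk_size=4, overlap=1):
    print(chunk)
```

Smaller chunks give more precise matches but less surrounding context, so this is usually a setting worth experimenting with.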

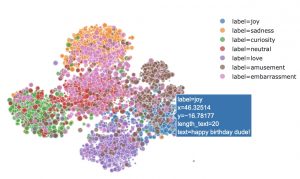

Step 3: Converting Text into AI Language – Text-to-Vector Conversion

After chunking comes the translation phase. Our RAG model can’t compare raw text the way we do, so it converts each chunk into a form it can work with: a mathematical vector. This process is known as embedding. Text is transformed into a list of numbers that captures its context and semantics, so that passages with similar meanings end up with similar vectors.
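To show the shape of this step, here is a toy sketch that turns text into a fixed-length vector. Be warned that this is a stand-in of my own, not a real embedding model: it hashes words into buckets, so it only captures word overlap, whereas a learned embedding model captures meaning. The names `embed` and `similarity` and the dimension of 64 are assumptions.

```python
import hashlib
import math
import re


def embed(text: str, dim: int = 64) -> list[float]:
    """Toy embedding: hash each word into one of `dim` buckets, then L2-normalize."""
    vector = [0.0] * dim
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        # A stable hash (unlike the built-in hash()) keeps vectors reproducible across runs.
        bucket = int(hashlib.md5(word.encode("utf-8")).hexdigest(), 16) % dim
        vector[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm > 0 else vector


def similarity(a: list[float], b: list[float]) -> float:
    """Dot product; for unit-length vectors this equals the cosine similarity."""
    return sum(x * y for x, y in zip(a, b))


a = embed("vector search")
b = embed("vector index")
print(len(a), round(similarity(a, a), 3), similarity(a, b) > 0)
```

The point to take away is the interface: text goes in, a list of numbers comes out, and closeness between two lists can be measured with simple arithmetic.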

Step 4: Creating the Link

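The final step before generation is linking these vectors back to the source data. Each vector is stored alongside a reference to the chunk it came from, typically in a vector index. When a question arrives, it is embedded in the same way, the retriever finds the stored vectors closest to it, and the link leads back to the original text. This link is crucial; it ensures that the retrieval model fetches the most relevant information, which in turn informs the generative model to produce meaningful text. It’s like the final piece of a puzzle that completes the picture.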
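A minimal sketch of this linking step might look like the following. Every entry keeps the vector next to the chunk and its origin, so a match on the vector leads straight back to readable text. The class names `Entry` and `VectorIndex`, the file names, and the word-count “embedding” are all hypothetical stand-ins chosen to keep the example self-contained; a real system would use an embedding model and a dedicated vector store.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse vector of word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class Entry:
    vector: Counter  # what the retriever compares against
    chunk: str       # the original text the vector was computed from
    source: str      # where the chunk came from (hypothetical file names below)


class VectorIndex:
    """Stores each vector next to its chunk so a match can be traced back to text."""

    def __init__(self) -> None:
        self.entries: list[Entry] = []

    def add(self, chunk: str, source: str) -> None:
        self.entries.append(Entry(embed(chunk), chunk, source))

    def search(self, query: str, top_k: int = 2) -> list[tuple[float, Entry]]:
        query_vector = embed(query)
        scored = [(cosine(query_vector, e.vector), e) for e in self.entries]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]


index = VectorIndex()
index.add("Chunking splits documents into smaller passages.", "chunking.md")
index.add("Embeddings turn text into numerical vectors.", "embeddings.md")
index.add("The generator writes the final answer.", "generation.md")

for score, entry in index.search("How are embeddings turned into vectors?", top_k=2):
    print(f"{score:.2f}  {entry.source}: {entry.chunk}")
```

This version compares the query against every entry, which is fine for a handful of chunks; large collections usually rely on approximate nearest-neighbor search instead.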

With these steps, RAG becomes a unified system, capable of searching, selecting, and synthesizing information in ways traditional models can’t. This hybrid architecture is what gives RAG its edge in generating context-rich text that is grounded in its sources.
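Putting the pieces together, the whole flow can be sketched in a few lines: retrieve the best-matching chunks, place them in front of the question, and pass the result to the generator. Everything here is an assumption for illustration. The word-count similarity replaces a real embedding model, the prompt wording is my own, and `generate` is only a placeholder where a call to an actual language model would go.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse vector of word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return the `top_k` chunks most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vector, embed(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, context: list[str]) -> str:
    """Put the retrieved chunks in front of the question for the generator."""
    context_block = "\n".join(f"- {chunk}" for chunk in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}\n"
        "Answer:"
    )


def generate(prompt: str) -> str:
    """Placeholder for a language model call; it only reports the prompt size."""
    return f"[an LLM would answer here, given a {len(prompt)}-character prompt]"


chunks = [
    "The retriever finds chunks that are relevant to the question.",
    "The generator writes an answer from the prompt.",
    "Paris is the capital of France.",
]
question = "What does the retriever do?"
prompt = build_prompt(question, retrieve(question, chunks, top_k=2))
print(prompt)
print(generate(prompt))
```

Notice that the language model never sees the unrelated chunk about Paris; the retrieval step decides what ends up in the prompt.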

Benefits of Using RAG in AI Applications

RAG: The AI Game-Changer

Imagine an AI that not only answers your questions but also provides the most up-to-date, contextually relevant responses. That’s what RAG brings to the table. Let’s explore some of its standout advantages:

- Enhanced Contextual Understanding: RAG models don’t just spit out pre-learned responses. They comprehend the context of each query by fetching relevant information from external sources. This results in answers that align seamlessly with the specific context of your input, making interactions much more accurate and meaningful.

- Updatable Memory: One of RAG’s coolest features is its dynamic memory. Unlike traditional models that need extensive retraining to update their knowledge, RAG can pick up new or corrected information as soon as it is added to the knowledge source. Its responses are therefore as current as the data it retrieves from.

- Source Citations for Credibility: RAG-equipped models can cite sources for their responses, just like a well-researched academic paper. This transparency builds trust and credibility, allowing users to verify the information provided by the AI.

- Reduced Hallucinations: AI models sometimes make confident but inaccurate guesses, known as hallucinations. RAG models, which ground their answers in retrieved real-world information, are less prone to this issue, though not immune to it. Keeping sensitive data in a controlled knowledge source rather than in the model’s training data can also make it less likely to leak.
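Two of these benefits, updatable memory and source citations, are easy to illustrate with a small sketch. Below, a new document is added while the program runs and becomes retrievable right away, with no retraining, and each retrieved passage is numbered with its source so an answer can point back to it. The class name `KnowledgeBase`, the file names, and the toy word-count similarity are hypothetical choices of mine.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse vector of word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class KnowledgeBase:
    """A list of (source, text) pairs that can grow while the system is running."""

    def __init__(self) -> None:
        self.docs: list[tuple[str, str]] = []

    def add(self, source: str, text: str) -> None:
        self.docs.append((source, text))

    def search(self, query: str, top_k: int = 2) -> list[tuple[str, str]]:
        query_vector = embed(query)
        scored = [(cosine(query_vector, embed(text)), source, text)
                  for source, text in self.docs]
        hits = [hit for hit in scored if hit[0] > 0]  # drop passages with no overlap
        hits.sort(key=lambda hit: hit[0], reverse=True)
        return [(source, text) for _, source, text in hits[:top_k]]


def cited_context(passages: list[tuple[str, str]]) -> str:
    """Number each passage so the generator can refer to it as [1], [2], ..."""
    return "\n".join(
        f"[{i}] ({source}) {text}"
        for i, (source, text) in enumerate(passages, start=1)
    )


kb = KnowledgeBase()
kb.add("intro.md", "RAG retrieves passages before generating an answer.")

question = "What changed in release 2.0?"
before = kb.search(question)   # nothing relevant is known yet

kb.add("changelog.md", "Release 2.0 added hybrid search.")
after = kb.search(question)    # the new document is found immediately

print(before)
print(cited_context(after))
```

Placing the numbered passages in the prompt and asking the model to cite them by number is one common way to get verifiable answers, though the model still has to follow that instruction.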

With these benefits, RAG is transforming how AI systems function, making them smarter, more reliable, and incredibly versatile.

Applications of RAG in Various Fields

RAG: A Versatile Tool Across Domains

The applications of RAG are as varied as they are impressive. Let’s look at how RAG is making waves across different sectors:

- Chatbots and AI Assistants: RAG takes chatbots to the next level. These systems can now provide detailed, context-aware answers by tapping into extensive knowledge bases. This makes interactions with AI assistants more informative and engaging.

- Education Tools: In the educational realm, RAG can significantly enhance learning tools. It allows access to answers and explanations based on textbooks and reference materials, facilitating effective learning and comprehension for students.

- Legal Research and Document Review: Legal professionals can leverage RAG to streamline document reviews and conduct efficient research. It aids in summarizing complex legal documents, saving time and enhancing accuracy.

- Medical Diagnosis and Healthcare: In healthcare, RAG serves as a valuable tool for medical professionals. By providing access to the latest medical literature and guidelines, it aids in accurate diagnosis and treatment recommendations.

- Language Translation with Context: For language translation, RAG offers a more nuanced approach by drawing on context stored in knowledge bases. This results in more accurate translations, especially valuable in technical or specialized fields.

Challenges and Limitations of RAG

Navigating the Hurdles of RAG

While RAG is transformative, it’s not without its challenges. Here’s a look at some of the hurdles encountered in RAG implementation:

- Model Complexity: RAG’s architecture, blending retrieval and generative components, is inherently complex. This complexity demands significant computational resources, making the model more demanding in terms of processing power and harder to debug.

- Balancing Act: The dual nature of RAG – retrieving and then generating text – can impact performance, especially in real-time applications. Striking the right balance between the depth of retrieval and speed of response is crucial, particularly in time-sensitive scenarios.

- Data Preparation: Ensuring the source data is clean, comprehensive, and free from redundancy is a substantial task. Moreover, finding the right embedding model that performs well across a diverse array of information is equally challenging.
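The balancing act between retrieval depth and response speed often comes down to how much retrieved text you pass to the generator. Here is a minimal sketch of one way to cap it: take chunks in rank order until a size budget is used up. The function name `fit_to_budget` is my own, and counting words is an assumption that stands in for the token counts a real model would impose.

```python
def fit_to_budget(ranked_chunks: list[str], max_words: int) -> list[str]:
    """Keep the highest-ranked chunks whose combined length fits in `max_words`."""
    selected: list[str] = []
    used = 0
    for chunk in ranked_chunks:
        size = len(chunk.split())
        if used + size > max_words:
            break  # stop at the first chunk that does not fit, preserving rank order
        selected.append(chunk)
        used += size
    return selected


ranked = ["a b c", "d e", "f g h i", "j"]  # already sorted from most to least relevant
print(fit_to_budget(ranked, max_words=5))
print(fit_to_budget(ranked, max_words=100))
```

A larger budget gives the generator more to work with but makes each request slower and more expensive, so the limit is worth tuning for time-sensitive applications.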

Despite these challenges, RAG’s advantages in enhancing AI capabilities are undeniable, making it a field ripe for exploration and improvement.

The Future of RAG and LLMs

The Road Ahead for RAG

The future of RAG and Large Language Models (LLMs) is brimming with potential. Here are some trends and developments we can anticipate:

- Advanced Retrieval Mechanisms: Future iterations of RAG will likely feature more precise and efficient document retrieval processes. This will involve sophisticated algorithms and AI techniques focusing on enhancing retrieval accuracy.

- Integration with Multimodal AI: We can expect RAG to merge with multimodal AI, combining text with other data types like images and videos. This synergy promises richer, more contextually aware responses, paving the way for innovative applications across various fields.

- Industry-Specific Applications: As RAG matures, it’s set to penetrate industry-specific applications. For instance, in healthcare, RAG could assist doctors by instantly retrieving the latest clinical guidelines, thereby ensuring up-to-date medical care.

- Ongoing Research and Innovation: The RAG landscape will continue to evolve, driven by relentless research and innovation. This will likely result in more accurate, efficient, and versatile RAG models.

- Enhanced Retrieval in LLMs: Future LLMs might integrate retrieval as a core feature, making them more adept at accessing and utilizing external knowledge sources. This integration will lead to more context-aware and competent LLMs.

Conclusion

In summary, Retrieval-Augmented Generation (RAG) is a game-changer in the realm of AI and deep learning. Its ability to dynamically integrate external knowledge elevates the capabilities of AI systems, making them more informed, accurate, and contextually aware. While challenges exist, the potential applications and future advancements of RAG are vast and exciting. As we continue to explore and refine this technology, RAG stands poised to revolutionize how we interact with and benefit from AI-powered solutions.

Note: Portions of this article may have been written with the assistance of ChatGPT, an AI language model developed by OpenAI.
