Here, we’ll see how to upload files from a public Google Drive to Google Colab, without an access key and without connecting your own Drive.

When we do Machine Learning in a Jupyter Notebook or on Google Colab, we usually need to load files into our session. Working alone, we can import them from our local machine, but as soon as several people work together, the question of how to load the data arises.

This data can be very large, especially when it comes to pre-trained models or checkpoints.

Many people store these files on Google Drive or other storage platforms. This saves time and space on their local drive.

Python gives us the possibility to upload these kinds of files stored in the cloud directly into our session, whether they are private, shared within a team, or public and accessible to all.

No need to download the models yourself: Python does it for you!

In this article, we will see how to do it!

Upload files from your Google Drive folder

Google Colab has a library that allows you to mount your own Drive:

from google.colab import drive
drive.mount('/content/drive')

After executing the code, Python gives us a link to find our access key.

We just have to enter the key in the corresponding field to access all the files of our Drive from our session.
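To confirm that the mount worked, one option is to list a few entries from the mounted folder. This is a minimal sketch: `list_drive_entries` is a hypothetical helper, and the path `/content/drive/MyDrive` is an assumption about where Colab exposes the Drive content after mounting at `/content/drive`.

```python
from pathlib import Path


def list_drive_entries(root, limit=10):
    """Return the names of up to `limit` entries directly under `root`."""
    root_path = Path(root)
    if not root_path.is_dir():
        # The Drive is not mounted (or we are not running in Colab).
        return []
    return sorted(entry.name for entry in root_path.iterdir())[:limit]


# Assumed location of the Drive content after drive.mount('/content/drive').
for name in list_drive_entries("/content/drive/MyDrive"):
    print(name)
```

If nothing is printed, the folder is either empty or not mounted at that location.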

This is an easy solution if you want to access the files on your own Drive, but what about files in a public directory that you want to access without an access key?

This can be a problem, especially if we collaborate with people who don’t have access to our Drive’s password, or if we want to make our freshly baked Machine Learning algorithm public!

Don’t worry, there is a solution to upload public Drive files! And it even works for big files!

Upload files from a public Google Drive folder

Google Colab lets us run shell commands such as pip, ls or wget, and it’s the last one we are interested in 😉

We are going to use wget to load the file we want from a public Google Drive directory, but first we need to get the ID of this file.

For that, we need to open the Drive link where the file is located, for example this one.

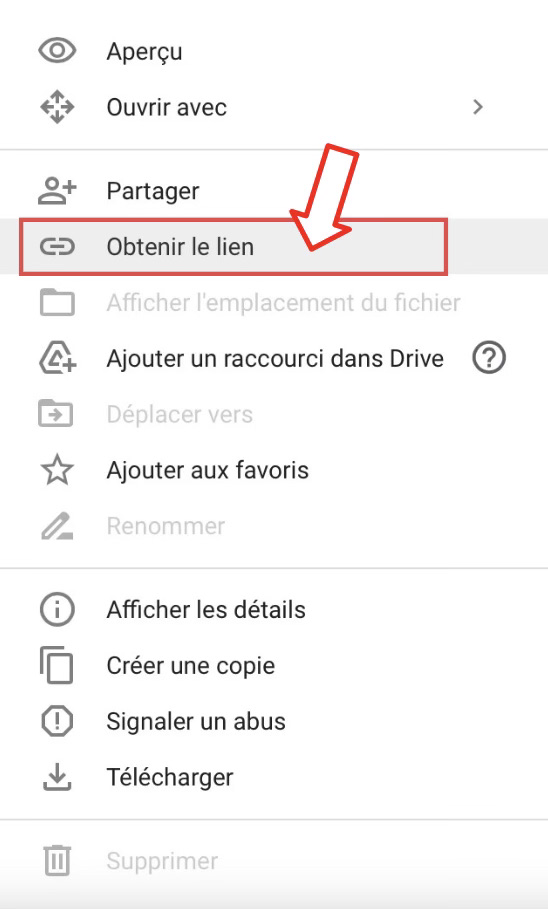

Then right-click on the file we are interested in and click on Get link, here:


Once done, you should get a link like this: ‘https://drive.google.com/file/d/1ML-Rpeaftox3z7b1GThUZtl1Ql_lGUrb/view?usp=sharing’

The ID you need to retrieve is between ‘https://drive.google.com/file/d/’ and ‘/view?usp=sharing’.

In our case, the ID is: 1ML-Rpeaftox3z7b1GThUZtl1Ql_lGUrb
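Instead of copying the ID by hand, it can be pulled out of the sharing link with a regular expression. A minimal sketch, assuming the link has the `/file/d/<ID>/` shape shown above; `extract_drive_id` is a hypothetical helper name, not part of any library.

```python
import re


def extract_drive_id(share_link):
    """Return the file ID found after '/file/d/' in a Drive sharing link."""
    match = re.search(r"/file/d/([^/?#]+)", share_link)
    if match is None:
        raise ValueError(f"No file ID found in: {share_link}")
    return match.group(1)


link = "https://drive.google.com/file/d/1ML-Rpeaftox3z7b1GThUZtl1Ql_lGUrb/view?usp=sharing"
print(extract_drive_id(link))  # 1ML-Rpeaftox3z7b1GThUZtl1Ql_lGUrb
```

Links with a different shape raise a `ValueError` rather than returning a wrong ID.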

Then, depending on the size of the file, we have two options:

With small files

Use the command:

!wget -q --show-progress --no-check-certificate 'https://docs.google.com/uc?export=download&id=iD' -O Output_file_name

With:

- iD: the ID of the file

- Output_file_name: the name we want to give the downloaded file, with the associated extension (e.g.: .txt, .png, .pdf, .zip, .tar, … )
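When the ID and the output name live in Python variables, the same command can be assembled in code. This is only a sketch: `build_wget_command` is a hypothetical helper that builds the argument list with the flags used above, and running it is left to `subprocess.run(command)` or to a `!wget` cell.

```python
import shlex


def build_wget_command(file_id, output_file_name):
    """Build the small-file wget command as a list of arguments."""
    url = f"https://docs.google.com/uc?export=download&id={file_id}"
    return [
        "wget", "-q", "--show-progress", "--no-check-certificate",
        url, "-O", output_file_name,
    ]


command = build_wget_command("1ML-Rpeaftox3z7b1GThUZtl1Ql_lGUrb", "fichier.tar")
# shlex.join quotes the URL so the line can be pasted into a shell.
print(shlex.join(command))
```

Passing the arguments as a list avoids quoting problems with the `&` characters in the URL.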

In our case:

!wget -q --show-progress --no-check-certificate 'https://docs.google.com/uc?export=download&id=1ML-Rpeaftox3z7b1GThUZtl1Ql_lGUrb' -O fichier.tar

With large files

Use the command:

!wget -q --show-progress --load-cookies /tmp/cookies.txt "https://docs.google.com/uc?export=download&confirm=$(wget --quiet --save-cookies /tmp/cookies.txt --keep-session-cookies --no-check-certificate 'https://docs.google.com/uc?export=download&id=iD' -O- | sed -rn 's/.*confirm=([0-9A-Za-z_]+).*/\1\n/p')&id=iD" -O Output_file_name && rm -rf /tmp/cookies.txt

With:

- iD: the ID of the file

- Output_file_name: the name we want to give the downloaded file, with the associated extension (e.g.: .txt, .png, .pdf, .zip, .tar, … )
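This command is really two requests. The inner wget saves the session cookies and prints the page it receives, sed keeps only the value that follows `confirm=`, and the outer wget reuses the cookies together with that value. The sketch below mirrors the sed step in Python to show what is being extracted; `extract_confirm_token` is a hypothetical helper, and the sample line is made up for illustration, not a real Google Drive response.

```python
import re

# Same pattern as the sed expression: greedy prefix, then the token characters.
CONFIRM_PATTERN = re.compile(r".*confirm=([0-9A-Za-z_]+)")


def extract_confirm_token(page_text):
    """Return the confirm token from the first line that contains one, or None."""
    for line in page_text.splitlines():
        match = CONFIRM_PATTERN.match(line)
        if match:
            return match.group(1)
    return None


# Hypothetical line, made up for illustration only.
sample_page = '<a href="/uc?export=download&amp;confirm=AbC1&amp;id=FILE_ID">Download anyway</a>'
print(extract_confirm_token(sample_page))  # AbC1
```

If no token is found, the function returns `None`, which corresponds to sed printing nothing and the `confirm=` parameter being left empty.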

In our case:

!wget -q --show-progress --load-cookies /tmp/cookies.txt "https://docs.google.com/uc?export=download&confirm=$(wget --quiet --save-cookies /tmp/cookies.txt --keep-session-cookies --no-check-certificate 'https://docs.google.com/uc?export=download&id=1ML-Rpeaftox3z7b1GThUZtl1Ql_lGUrb' -O- | sed -rn 's/.*confirm=([0-9A-Za-z_]+).*/\1\n/p')&id=1ML-Rpeaftox3z7b1GThUZtl1Ql_lGUrb" -O fichier.tar && rm -rf /tmp/cookies.txt

You’ve managed to upload a public file to your notebook.

Thanks to this, you’ll surely be able to train a gigantic neural network! 🐼
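Before training anything, it can be worth checking that the downloaded file is what we expect. If the confirmation step fails, wget may save an HTML page under the output name instead of the real file. The sketch below is one possible check, assuming the file is a tar archive as in our example; `looks_like_html` and `check_download` are hypothetical helpers.

```python
import os
import tarfile


def looks_like_html(path, probe_size=512):
    """Return True if the file starts like an HTML page."""
    with open(path, "rb") as handle:
        head = handle.read(probe_size).lstrip().lower()
    return head.startswith((b"<!doctype html", b"<html"))


def check_download(path):
    """Classify a downloaded file as an HTML page, a tar archive, or something else."""
    if looks_like_html(path):
        return "html page (download probably failed)"
    if tarfile.is_tarfile(path):
        return "tar archive"
    return "other file"


# Only run the check if the file from our example is present.
if os.path.exists("fichier.tar"):
    print(check_download("fichier.tar"))
```

For other formats, the `tarfile.is_tarfile` test would be replaced by the matching check, for example `zipfile.is_zipfile` for .zip files.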

Today, it’s thanks to Deep Learning that tech leaders can create the most powerful Artificial Intelligences.

If you want to deepen your knowledge in the field, you can access my Action plan to Master Neural networks.

A program of 7 free courses that I’ve prepared to guide you on your journey to learn Deep Learning.

If you’re interested, click here:

Sources:

- Medium

- Photo by David Marcu on Unsplash
