Here, I’ll show you how to install and use cuML on Google Colab to boost the training speed of your Machine Learning models.

Google Colab offers cloud-based notebooks for running Python code.

Mostly used by Data Scientists and ML Engineers, Colab has one drawback: cuML is not installed in the base environment.

cuML, as its name suggests, is a Machine Learning library built on the CUDA architecture. It can speed up model training by up to 10 times compared to scikit-learn (sklearn).

ML Engineers therefore have a real need to use this library in Google Colab!

But what is CUDA? Why is sklearn so slow? How does cuML get around this obstacle? And above all, how can you use this library in Google Colab?

That’s what you’ll find out in this article 🚀

What is CUDA?

CUDA is a parallel computing platform and programming interface created by NVIDIA to extend GPUs beyond graphics to general-purpose computation.

Indeed, the GPU (graphics processing unit) was originally designed to speed up the display and rendering of 2D and 3D images. Having long pleased gamers, the GPU now delights developers too.

NVIDIA’s technology enables GPUs to be used to perform mathematical operations.

For example, in the field of Machine Learning, CUDA can be used to accelerate the training of Artificial Intelligence models. This optimization is achieved by distributing computations across different GPU cores.

When using a GPU, calculations are said to be distributed or parallelized (as they are performed simultaneously).

Compared with traditional CPU programming, CUDA enables parallel execution across cores, greatly speeding up the processing of certain tasks:

- physical simulations

- image processing

- backward propagation in neural networks

- and many others
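To make the idea of "distributing computations" concrete, here is a minimal sketch in plain Python. It is only a CPU-side analogy, not CUDA code: the function names are hypothetical, and Python threads will not give a real speedup here. It simply shows the pattern a GPU applies at a much larger scale: split the data, compute each piece independently, then combine the partial results.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(chunk):
    """Work done independently on one piece of the data."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, n_workers=4):
    """Split `values` into chunks, process each chunk in a worker, combine."""
    # Ceiling division so every element lands in exactly one chunk.
    chunk_size = max(1, (len(values) + n_workers - 1) // n_workers)
    chunks = [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    # The final reduction merges the partial results into one answer.
    return sum(partials)


values = list(range(1_000))
print(parallel_sum_of_squares(values))
```

On a GPU, the "workers" are thousands of cores running the same operation on different elements at once, which is why this pattern pays off for large arrays.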

In short, CUDA is NVIDIA's key technology for harnessing the power of GPUs. By running many operations simultaneously, it makes complex calculations practical, typically in scientific or engineering fields.

A major problem with scikit-learn

scikit-learn is a popular Python library for Machine Learning. It offers a variety of tools for classification, regression, clustering and dimensionality reduction.

However, this library suffers from a major limitation: it is unable to take advantage of a GPU.

Indeed, scikit-learn can only be used with a CPU, which limits its speed and efficiency for processing massive datasets or for training complex models.

Unlike scikit-learn, the TensorFlow and PyTorch libraries are designed to integrate easily with GPUs, making them much faster for certain tasks, particularly neural network training.

So, although scikit-learn is a valuable and widely used tool for Machine Learning, its inability to use GPUs represents a significant disadvantage. A disadvantage that another library has managed to avoid – by harnessing the strength of CUDA.

By the way, if your goal is to master Deep Learning, I've prepared the Action plan to Master Neural networks for you.

7 days of free advice from an Artificial Intelligence engineer to learn how to master neural networks from scratch:

- Plan your training

- Structure your projects

- Develop your Artificial Intelligence algorithms

I have based this program on scientific facts, on approaches proven by researchers, but also on my own techniques, which I have devised as I have gained experience in the field of Deep Learning.

To access it, click here:

Now we can get back to what I was talking about earlier.

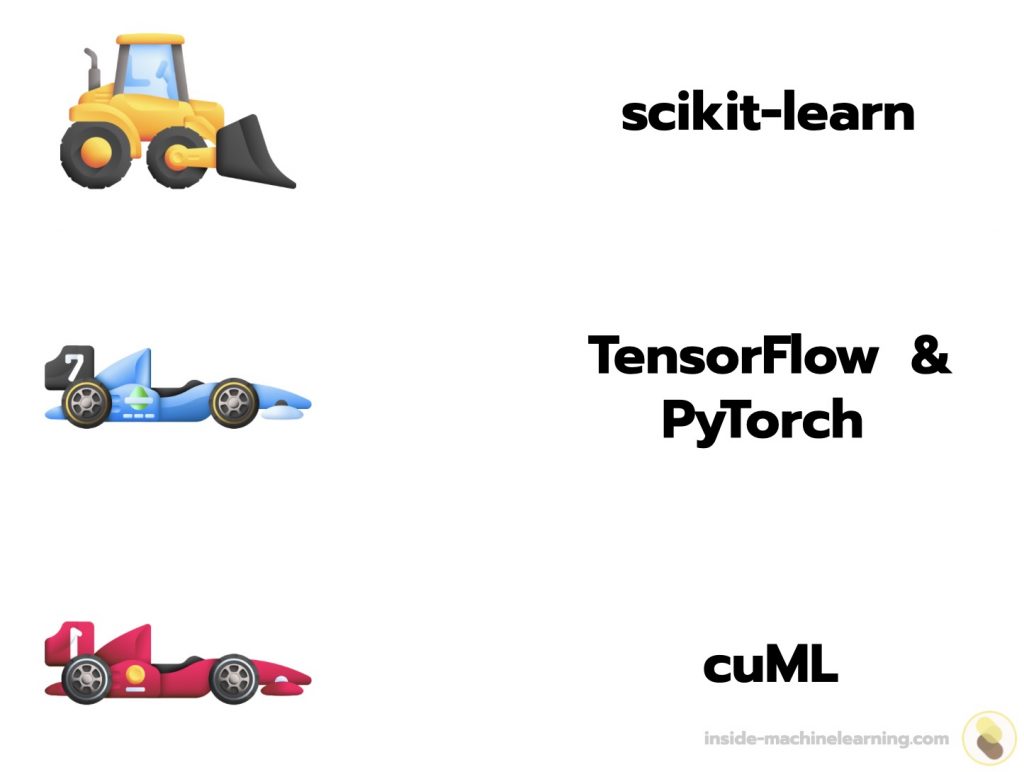

What is cuML?

cuML is a suite of GPU-accelerated Machine Learning algorithms, part of NVIDIA's RAPIDS project, that harnesses the power of CUDA to speed up the training of AI models.

It enables Machine Learning operations to be carried out faster and more efficiently than traditional CPU-based methods.

Let’s take the example of a classification algorithm, such as Random Forest. Using cuML, this algorithm can be run on a GPU, considerably reducing the time needed for training.

Thanks to cuML, Machine Learning models can be trained on large amounts of data in record time.

Compared with other Machine Learning libraries such as scikit-learn, which are designed for execution on CPUs, cuML is specially optimized for GPUs.

cuML represents a significant advance in Machine Learning, offering increased processing speed and efficiency through the use of CUDA.

This broadens the scope for using Machine Learning models, particularly for applications dealing with large amounts of data.

Tutorial: installing / using cuML with CUDA on Google Colab

Installing cuML on Google Colab

To install the cuML library on Google Colab, you first need to activate the GPU.

Here’s how to do it:

- Go to Runtime.

- Click on Change runtime type.

- Select T4 GPU.

- Click on Save.
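Before installing anything, it can help to confirm that the runtime really has a GPU attached. The sketch below assumes the `nvidia-smi` command-line tool is present on GPU runtimes; the helper name `gpu_is_visible` is hypothetical.

```python
import shutil
import subprocess


def gpu_is_visible() -> bool:
    """Return True if nvidia-smi is on the PATH and exits successfully."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        # The tool is missing, so the runtime most likely has no GPU.
        return False
    try:
        result = subprocess.run([exe], capture_output=True, text=True, timeout=30)
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


print("GPU visible:", gpu_is_visible())
```

If this prints `False`, go back through the steps above and check that the GPU runtime was saved.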

Once the GPU is activated, you can install cuML by executing the following commands in a code cell:

!pip uninstall -y cupy-cuda11x

!pip install --extra-index-url=https://pypi.nvidia.com cudf-cu11 cuml-cu11

!pip install pandas==1.5.3

After these steps, cuML should be installed and ready to use in your Google Colab environment.

Note: These commands worked as of November 27, 2023.
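Since package versions on Colab change over time, a quick check that the install succeeded can save some debugging. This is a small sketch with a hypothetical helper, `installed_version`; it assumes the module exposes a `__version__` attribute and falls back to "unknown" otherwise.

```python
import importlib
import importlib.util


def installed_version(module_name):
    """Return the module's version string, or None if it is not installed."""
    if importlib.util.find_spec(module_name) is None:
        return None
    module = importlib.import_module(module_name)
    # Not every module defines __version__, so fall back gracefully.
    return getattr(module, "__version__", "unknown")


print("cuML version:", installed_version("cuml"))
```

A result of `None` means the import would fail, in which case rerunning the install cell (or restarting the runtime) is the first thing to try.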

Using cuML on Google Colab

To start using cuML, simply import the library:

import cuml

Then, you can use the library in the same way as you use scikit-learn, but with the advantage of GPU acceleration.

from cuml.ensemble import RandomForestClassifier

X = X.astype('float32')

y = y.astype('float32')

cuml_rand_forest = RandomForestClassifier()

cuml_rand_forest.fit(X, y)

Note: to execute this code, you’ll need to select a dataset and create the appropriate X and y variables.
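If you don't have a dataset at hand, here is one possible way to create X and y. This is a sketch under assumptions: the data is synthetic and generated with NumPy, the shapes and the labeling rule are arbitrary choices for illustration, and the cuML import is guarded so the cell still runs on a machine without cuML.

```python
import numpy as np

# Synthetic data: 1000 samples, 20 features (arbitrary sizes for illustration).
rng = np.random.default_rng(seed=0)
X = rng.normal(size=(1000, 20)).astype("float32")

# Binary label derived from the first two features, cast as in the code above.
y = (X[:, 0] + X[:, 1] > 0).astype("float32")

try:
    from cuml.ensemble import RandomForestClassifier
except ImportError:
    RandomForestClassifier = None  # cuML is not installed in this environment

if RandomForestClassifier is not None:
    cuml_rand_forest = RandomForestClassifier()
    cuml_rand_forest.fit(X, y)
    preds = np.asarray(cuml_rand_forest.predict(X))
    print("Training accuracy:", float((preds == y).mean()))
else:
    print("cuML not available; X and y are ready:", X.shape, y.shape)
```

With a real dataset, only the two lines that build X and y need to change; the rest stays the same.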

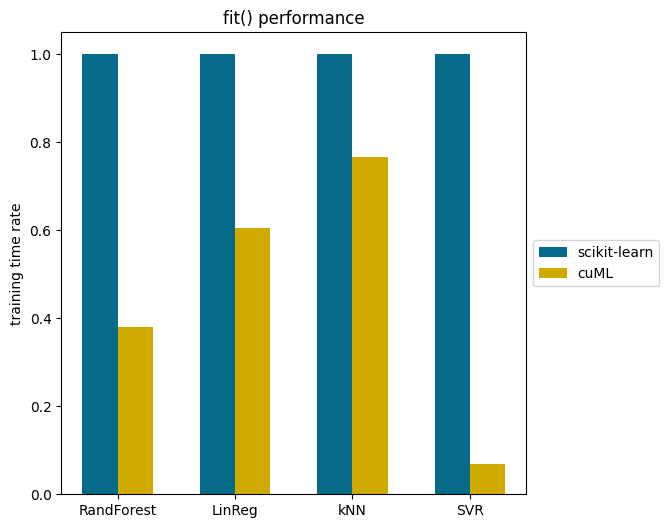

Performance comparison: cuML vs Sklearn

One of the significant advantages of cuML is its execution time compared with equivalent models in scikit-learn.

I personally compared model execution times for the two libraries. The difference is noticeable. I’ll let you see for yourself in this graph:

Random Forest, Linear Regression, kNN, and SVR models show a considerable time reduction with cuML.

In the case of SVR, cuML can be more than 10 times faster!
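If you want to run a similar comparison yourself, a small timing helper is enough. The sketch below is not the exact benchmark behind the graph: `time_call` and `speedup` are hypothetical names, and the commented usage assumes you already have a scikit-learn model, a cuML model, and X and y defined.

```python
import time


def time_call(fn, repeats=3):
    """Return the best wall-clock time in seconds over `repeats` calls of fn()."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        best = min(best, time.perf_counter() - start)
    return best


def speedup(cpu_seconds, gpu_seconds):
    """How many times faster the GPU run was compared to the CPU run."""
    return cpu_seconds / gpu_seconds


# Example usage (assumes sk_model, cu_model, X and y already exist):
# cpu_time = time_call(lambda: sk_model.fit(X, y))
# gpu_time = time_call(lambda: cu_model.fit(X, y))
# print(f"cuML is {speedup(cpu_time, gpu_time):.1f}x faster")
```

Taking the best of several runs reduces the effect of one-off costs such as the first GPU initialization, which can otherwise make the GPU look slower than it is.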

Machine Learning with cuML is a valuable card 🃏 to have up your sleeve, but today, it’s thanks to Deep Learning that tech leaders can create the most powerful Artificial Intelligences.

If you want to deepen your knowledge in the field, you can access my Action plan to Master Neural networks.

A program of 7 free courses that I’ve prepared to guide you on your journey to master Deep Learning.

If you’re interested, click here:
