Activation functions might seem complex when you are starting out in Deep Learning. Yet these 3 things are all you need to understand them!

Deep Learning is a subfield of Machine Learning.

Its defining feature is the use of layers of neurons. These layers, stacked end to end, make up a Neural Network.

But what is the role of activation functions in this system?

A simple role

Each layer of neurons has its own activation function.

Each time data crosses a layer, it gets modified.

In fact, two modifications are applied in each layer:

- first, the neurons transform our data

- then, the activation function rescales the result (here, it normalizes the values so that they are not scattered)
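The two steps above can be sketched in a few lines of plain Python. This is only an illustration under assumptions: the neuron's transformation is taken to be a weighted sum plus a bias, the activation is the sigmoid function, and the names `dense_layer`, `weights`, and `biases` as well as all numeric values are hypothetical.

```python
import math

def sigmoid(z):
    """Squash a real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases, activation):
    """Apply one layer: a weighted sum per neuron, then the activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Step 1: the neuron transforms the data (weighted sum plus bias).
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        # Step 2: the activation function rescales the neuron's output.
        outputs.append(activation(z))
    return outputs

# Hypothetical values: 3 inputs feeding a layer of 2 neurons.
inputs = [0.5, -1.2, 3.0]
weights = [[0.2, 0.8, -0.5], [1.5, -0.3, 0.1]]
biases = [0.1, -0.2]

layer_output = dense_layer(inputs, weights, biases, sigmoid)
print(layer_output)  # two values, one per neuron, each between 0 and 1
```

Passing the activation as an argument keeps the two steps visibly separate, so swapping in another activation function only changes the last argument.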

Let’s take a simple example:

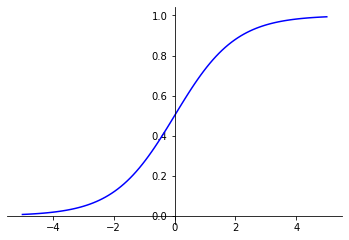

With the Sigmoid activation function, all of our data will be bounded between 0 and 1.

Bounding values between 0 and 1 is referred to as normalization.

Normalization is THE technique that makes it easier for our model to learn. ✨
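To see this bounding effect on actual numbers, here is a small sketch using the standard sigmoid formula, 1 / (1 + e^(-z)). The input values are made up for illustration.

```python
import math

def sigmoid(z):
    """Standard sigmoid: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical scattered values, as they might come out of a layer's neurons.
scattered = [-8.0, -1.5, 0.0, 2.0, 6.0]
bounded = [sigmoid(v) for v in scattered]

for raw, squashed in zip(scattered, bounded):
    print(f"{raw:6.1f} -> {squashed:.4f}")
```

Large negative inputs end up close to 0, large positive inputs close to 1, and an input of 0 lands exactly on 0.5. The order of the values is preserved.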

But this is not the only use of activation functions!

The heart of the Neural Network

What if I told you that without activation functions, there is no Deep Learning?

Earlier, we saw that two modifications are applied in each layer of the neural network.

These modifications are in fact functions.

So first comes the function applied by the neurons, then the one that interests us in this article: the activation function.

It is the activation function that brings real change to the data. Why? Because the activation function is non-linear, whereas the function applied by the neurons is linear.

Indeed, a sequence of several linear functions is mathematically equivalent to a single linear function.

This is why a Deep Learning model built only from linear layers would be no more expressive than a single linear layer, however many layers it stacks.

Therefore, we want our model to be non-linear and that’s why we introduce activation functions.
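The argument above can be checked numerically. The sketch below uses two made-up one-dimensional linear functions (the coefficients are arbitrary assumptions) and shows that stacking them gives exactly one linear function, while placing a ReLU between them does not.

```python
def relu(z):
    """ReLU: keep positive values, replace negative ones with 0."""
    return max(0.0, z)

def linear_1(x):
    return 2.0 * x + 1.0

def linear_2(x):
    return -3.0 * x + 4.0

def stacked(x):
    """Two linear functions applied one after the other."""
    return linear_2(linear_1(x))

def collapsed(x):
    """The single linear function obtained by expanding -3 * (2x + 1) + 4."""
    return -6.0 * x + 1.0

def stacked_with_relu(x):
    """The same stack with a non-linear activation in between."""
    return linear_2(relu(linear_1(x)))

samples = [-2.0, -0.5, 0.0, 1.0, 3.0]

# Without activation, the stack matches one linear function on every sample.
matches_single_linear = all(stacked(x) == collapsed(x) for x in samples)

# With ReLU, the slope between consecutive samples is no longer constant,
# so no single straight line can reproduce the outputs.
relu_outputs = [stacked_with_relu(x) for x in samples]
slopes = [
    (relu_outputs[i + 1] - relu_outputs[i]) / (samples[i + 1] - samples[i])
    for i in range(len(samples) - 1)
]

print(matches_single_linear)
print(relu_outputs)
print(slopes)
```

The same collapse happens with real layers, where the numbers become matrices: multiplying two weight matrices together still gives a single weight matrix.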

By the way, if your goal is to master Deep Learning, I've prepared the Action Plan to Master Neural Networks for you.

7 days of free advice from an Artificial Intelligence engineer to learn how to master neural networks from scratch:

- Plan your training

- Structure your projects

- Develop your Artificial Intelligence algorithms

I have based this program on scientific facts, on approaches proven by researchers, but also on my own techniques, which I have devised as I have gained experience in the field of Deep Learning.

To access it, click here:

Now we can get back to what I was talking about earlier.

We have written an article that explains in detail why non-linear functions are needed. For the most curious, follow this link! 🔥

The most used functions

Let’s move on to the practical part.

There are dozens of activation functions. Your only problem will be choosing which one to use.

So, what are the most used activation functions?

In most projects, three activation functions come up again and again:

- ReLU, the most popular

- Sigmoid

- Softmax

If you understand these functions, you will have the basics to get started with Deep Learning! 🦎
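To make these three functions concrete, here is a minimal plain-Python sketch of their standard definitions. It is meant for understanding only, not as a replacement for a library implementation, and the input scores are made up.

```python
import math

def relu(z):
    """ReLU: negative values become 0, positive values pass through."""
    return max(0.0, z)

def sigmoid(z):
    """Sigmoid: squashes a single value into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(scores):
    """Softmax: turns a list of scores into probabilities that sum to 1."""
    # Subtracting the maximum leaves the result unchanged but avoids overflow.
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores, for illustration only.
scores = [-2.0, 0.0, 3.0]

relu_out = [relu(s) for s in scores]
sigmoid_out = [sigmoid(s) for s in scores]
softmax_out = softmax(scores)

print(relu_out)
print(sigmoid_out)
print(softmax_out)
```

Note the difference in shape: ReLU and sigmoid act on each value independently, while softmax looks at the whole list at once, which is why it is commonly used on the output layer of a classifier.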

And here is the code to use them with the Keras library:

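import tensorflow as tf

x = tf.constant([[-2.0, 0.0, 3.0]])  # example input: one row of three values

tf.keras.activations.relu(x)  # negative values become 0

tf.keras.activations.sigmoid(x)  # each value is squashed between 0 and 1

tf.keras.activations.softmax(x)  # each row becomes probabilities that sum to 1

tf.keras.activations.tanh(x)  # another common choice: values squashed between -1 and 1

There are many more activation functions; you can explore them all in this detailed article. At the end, you will find a bonus table summarizing when to use each one 😉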

Sources:

- Quora

- Photo by Pedro Lastra on Unsplash
