How to extract a table from a website in a single line of Python code? It’s easy with this Pandas function!

If you work in data science, you have almost certainly used the Pandas library!

It’s the standard tool for working with tabular data in Python, and it lets you manipulate large data sets easily.

But did you know that you can also extract tables directly from a web page?

Extract a table from a site

Pandas is more than a data manipulation library.

It can also do some web scraping, that is, extracting information from web pages.

How?

Simply call the read_html() function with the URL of the target web page.

This function looks for every table in the web page and returns a list containing one DataFrame per table.

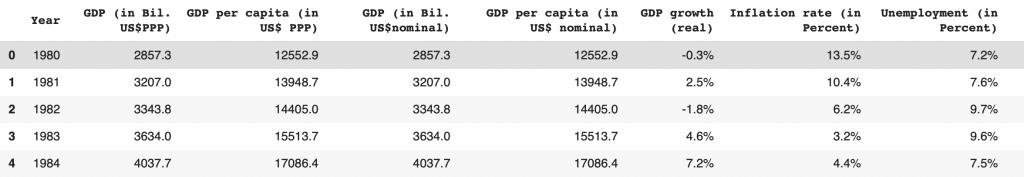

In the example below, we extract information about the economy of the United States from Wikipedia:

import pandas as pd

df = pd.read_html("https://en.wikipedia.org/wiki/Economy_of_the_United_States")

Then we can display the result:

df[3]

We directly get a DataFrame containing the table from the Wikipedia page!

What to know before using it
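If you want to try this without a network connection, here is a minimal sketch that runs read_html() on a small HTML string instead of a URL. The page content is invented for illustration, and the sketch assumes an HTML parser such as lxml is installed alongside Pandas.

```python
from io import StringIO

import pandas as pd

# Hypothetical page with two tables, made up for illustration.
HTML = """
<html><body>
<table>
  <tr><th>Country</th><th>Capital</th></tr>
  <tr><td>France</td><td>Paris</td></tr>
  <tr><td>Japan</td><td>Tokyo</td></tr>
</table>
<table>
  <tr><th>Year</th><th>Value</th></tr>
  <tr><td>2020</td><td>1.5</td></tr>
</table>
</body></html>
"""

# read_html() returns a list with one DataFrame per table it finds.
tables = pd.read_html(StringIO(HTML))

print(len(tables))  # number of tables found on the page
print(tables[0])    # first table, as a DataFrame
```

Wrapping the string in StringIO is deliberate: recent Pandas versions warn when raw HTML text is passed to read_html() directly.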

Notice that we have specified index ‘3’ to display the DataFrame.

The read_html() function looks for every HTML table tag on the page and extracts the data from each one.

So we don’t retrieve just one table, but every table on the page.
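To make the idea concrete, here is a rough sketch of what "collecting every table on a page" means, using only Python’s standard library. This is not how Pandas implements read_html() (it relies on dedicated parsers such as lxml or BeautifulSoup); the TableCollector class and the sample HTML are hypothetical and only meant as an illustration.

```python
from html.parser import HTMLParser


class TableCollector(HTMLParser):
    """Collect the cell text of every table, row by row (nested tables not handled)."""

    def __init__(self):
        super().__init__()
        self.tables = []        # one list of rows per table found
        self._in_table = False  # True while inside a table element
        self._row = None        # row being filled, or None outside a row
        self._cell = None       # text fragments of the current cell, or None

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
            self._in_table = True
        elif tag == "tr" and self._in_table:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only keep text that sits inside a table cell.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None
            self._cell = None
        elif tag == "table":
            self._in_table = False


# Hypothetical page: two tables separated by a paragraph.
HTML = (
    "<table><tr><th>A</th><th>B</th></tr><tr><td>1</td><td>2</td></tr></table>"
    "<p>text</p>"
    "<table><tr><td>x</td></tr></table>"
)

collector = TableCollector()
collector.feed(HTML)
print(len(collector.tables))  # one entry per table on the page
```

Each table ends up at its own index in the list, which is the same reason read_html() hands back several DataFrames for a single page.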

In our case, the table we are interested in is at index ‘3’.

So feel free to browse the DataFrames returned by read_html() to find out where your table is located!
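One way to do that browsing is to print a short summary of each DataFrame. The sketch below also uses the match parameter of read_html(), which keeps only the tables containing a given text. The sample HTML is invented for illustration, and a parser such as lxml is assumed to be installed.

```python
from io import StringIO

import pandas as pd

# Hypothetical page with two tables, made up for illustration.
HTML = """
<table>
  <tr><th>Country</th><th>Capital</th></tr>
  <tr><td>France</td><td>Paris</td></tr>
</table>
<table>
  <tr><th>Year</th><th>GDP</th></tr>
  <tr><td>2020</td><td>100</td></tr>
  <tr><td>2021</td><td>110</td></tr>
</table>
"""

tables = pd.read_html(StringIO(HTML))

# Print the index, shape and column names of each table to spot the right one.
for i, table in enumerate(tables):
    print(i, table.shape, list(table.columns))

# Alternatively, keep only the tables whose text matches a string or regex.
gdp_tables = pd.read_html(StringIO(HTML), match="GDP")
print(len(gdp_tables))
```

Filtering with match can be more robust than a hard-coded index, since the position of a table may change when the page is edited.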

Sometimes web pages aren’t well formed, and the extracted data can come out messy or incomplete. So expect to do some data cleaning after calling this function.

Fortunately for us, the data in our example was already clean!

That’s because major websites such as Wikipedia tend to have well-structured pages.
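As an illustration of the kind of cleaning you might need, here is a small sketch. The raw DataFrame is hypothetical: it imitates what a scraped table can look like, with footnote markers, thousands separators and missing values stored as text.

```python
import pandas as pd

# Hypothetical raw table, imitating what read_html() might return.
raw = pd.DataFrame({
    "Year": ["2019", "2020[a]", "2021"],
    "GDP (billion $)": ["21,381", "21,060", "n/a"],
})

clean = raw.copy()

# Drop footnote markers such as "[a]" and convert the years to integers.
clean["Year"] = clean["Year"].str.replace(r"\[.*?\]", "", regex=True).astype(int)

# Remove thousands separators; values that cannot be parsed become NaN.
clean["GDP (billion $)"] = pd.to_numeric(
    clean["GDP (billion $)"].str.replace(",", "", regex=False),
    errors="coerce",
)

print(clean)
```

The exact steps depend on the page you scrape, so treat this as a starting point rather than a recipe.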

Pandas isn’t the only library that can do web scraping.

BeautifulSoup is a library specialized in this field, and it enables the extraction of any kind of information from a web page, from tables to unstructured data!

We use it in depth in this article, where we analyze Elon Musk’s tweets with artificial intelligence.

Sources:

- Pandas: read_html()

Really Useful article

Thanks – perhaps the simplest method.

I tried this with the Spark documentation page (https://spark.apache.org/docs/latest/configuration.html).

It only prints one line of documentation for each parameter, and sometimes only a few columns.

I must be missing something.